Explainable time series classification with X-ROCKET

Felix Brunner

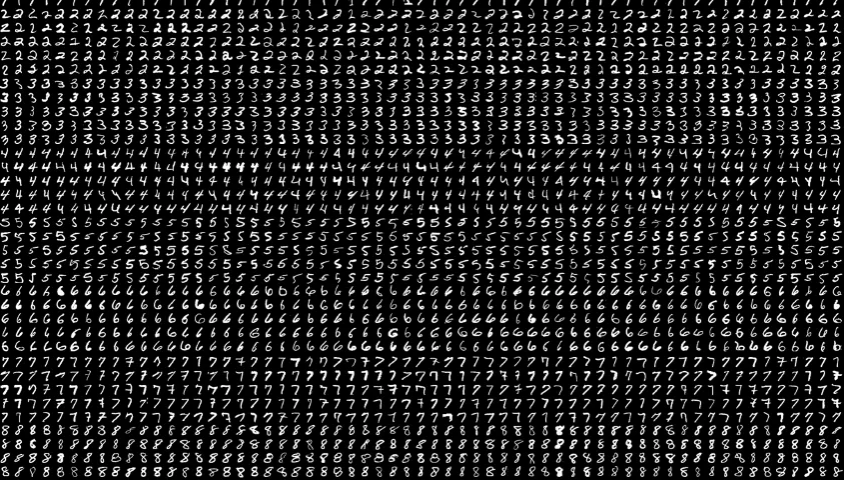

With the lasting hype around computer vision and natural language processing, time series are frequently overlooked when talking about impactful applications of machine learning. However, time series data is ubiquitous in many domains, and predictive modeling of such data often carries significant business value. One important task in this context is time series classification, which is attracting rising levels of attention due to its diverse applications in domains such as finance, healthcare, and manufacturing. Numerous techniques have been developed to tackle the unique challenges posed by time series data, where increased capacity often comes at the expense of interpretability and computational speed. While the race for a common state-of-the-art embedding model for time series continues, the RandOm Convolutional KErnel Transform (ROCKET) of Dempster et al. (2020) has gained significant attention as a simple yet powerful encoder model. In this series of articles, I will introduce the model’s underlying ideas and show an augmentation that adds explainability to its embeddings for use in downstream tasks. The series consists of three parts:

- This first part provides background information on time series classification and ROCKET.
- The second part sheds light on the inner workings of the X-ROCKET implementation.
- The third part takes us on an exploration of X-ROCKET’s capabilities in a practical setting.

The fundamentals of time series classification

A common task in the time series domain is to identify which of a set of categories an input belongs to. For example, one might be interested in diagnosing the state of a production machine given a sequence of sensor measurements, or in predicting the health of an organism from biomedical observations over a time interval. Formally, the problem can be described as follows: Given a sequence of observations at a regular frequency, calculate the probabilities of the input belonging to each of a fixed set of classes.
The input data for each example is usually structured as a 1D array of numerical values in the univariate case, or a 2D array if there are multiple channels. A prediction model then calculates class probabilities as its output. In this context, models are commonly composed of an encoder block that produces feature embeddings, and a classification algorithm that processes the embeddings to calculate the output probabilities, as schematically indicated in the diagram below.

Illustration of a time series classification pipeline (drawn in excalidraw).

While classification algorithms in machine learning have matured, it is less clear how to extract suitable features from time series inputs. Traditional time series approaches, such as Dynamic Time Warping and Fourier transforms, have shown promise in handling time series similarity and feature extraction. More recently, with the advent of deep learning, Recurrent Neural Networks (RNNs) and Convolutional Neural Networks (CNNs) have emerged as dominant methodologies to capture sequential patterns and spatial features, respectively. Finally, Transformer-based models with temporal attention have shown promise to further advance the field of time series classification in recent research (e.g., Zerveas et al. (2021)).

Despite these advancements, there are still substantial challenges to harvesting time series data. Where images or texts are immediately interpretable by our human brains in most cases, examining the fluctuations in time series recordings can be so unintuitive that it is impossible to assign class labels at all. In particular, it is often unclear how informative specific time series recordings are in the first place, which is aggravated by the widespread prevalence of noise. Hence, it is an open question how the data should be processed to extract potential signals from an input.
Additionally, unlike images, time series often vary in length, so methods for feature extraction should be able to summarize inputs in fixed-dimensional embedding vectors independent of input size. Finally, time series data may or may not be stationary, which potentially has adverse effects on prediction quality.

What works?

So what is the go-to model for time series classification? Alas, the answer is not that simple. This is mainly due to the lack of widely accepted benchmarks, which makes it impossible to fairly compare the numerous and diverse models proposed in the literature. But even if one wanted to construct such a unified benchmark dataset, it is not clear what it would have to contain to be representative of the diversity of time series data. In other words, measuring a model’s performance on low-frequency weather data might not be a good indication of its success with high-frequency audio files or DNA sequences. To get a sense of how different data in the time series domain can be, compare, for example, the visualizations of examples from various datasets in Middlehurst et al. (2023) below. Moreover, there is an important distinction between univariate and multivariate time series, that is, whether one or several variables are measured simultaneously. Unfortunately, evidence is particularly thin for the multivariate case, which is the more relevant case in many practical applications.

Visualizations of examples from various time series datasets from Middlehurst et al. (2023).

Having said that, there are a few resources that attempt to compare different methods in the domain of time series classification. On the one hand, the constantly updated time series classification leaderboard on Papers with Code provides scores for a few models on selected datasets. On the other hand, members of the research group behind the time series classification website have published papers (compare, e.g., Bagnall et al. (2017), Ruiz et al. (2021), and Middlehurst et al. (2023)) that conduct horse races between time series classification methods on their time series data archive. While the former favors a variety of RNNs and CNNs on its benchmarks, non-deep learning methods such as ROCKET fare particularly well on the latter. Therefore, it would be presumptuous to declare a general winner, and the answer is a resolute “well, it depends”.

In many cases, there are additional considerations besides pure performance that tip the scales when it comes to model choice. With limited availability of training data, more complex and capable models that require extensive training are often out of place. Ideally, there would be a pre-trained encoder model that could be used out of the box to extract meaningful patterns from any time series input without additional training, and that could be fine-tuned to a specific use case with moderate effort, as is the case in computer vision or NLP. Since the race for such a common embedding model is still on, there is often a trade-off between performance and computational efficiency. Moreover, practical applications often require predictions to be explainable; that is, domain experts often demand to understand which features of the input time series evoke a prediction. This is particularly true for sensitive use cases such as health care or autonomous driving. Therefore, choosing an explainable model is often crucial for the suitability and credibility of machine learning techniques.

Team ROCKET to the rescue

One relatively simple modeling approach for time series embeddings is the so-called ROCKET, short for RandOm Convolutional KErnel Transform. This methodology was first introduced in Dempster et al. (2020) and has been further developed in subsequent research papers. Noteworthy variants here are the MiniROCKET of Dempster et al. (2021), the MultiROCKET of Tan et al. (2022), and HYDRA of Dempster et al. (2023).
A main advantage over more complex methods is that ROCKET models are very fast in terms of computation and normally do not require any training to learn an informative embedding mapping, while predictive performance is on par with state-of-the-art models. For example, Ruiz et al. (2021) find that training is orders of magnitude faster for ROCKET than for other time series classification algorithms that achieve similar accuracy (see image below). This difference mainly stems from the fact that ROCKET encoders scan an input for a pre-defined set of possibly dispensable patterns and then only let the classifier learn which ones matter, instead of learning everything from scratch.

Model comparison chart taken from Ruiz et al. (2021).

The main idea behind ROCKET encodings builds on the recent successes of Convolutional Neural Networks (CNNs) and transfers them to feature extraction in time series datasets. In contrast to most CNNs in the image domain, however, the architecture does not involve any hidden layers or other non-linearities. Instead, a large number of preset kernels are convolved with the input separately, resulting in a transformation that indicates the strength of occurrences of the convolutional patterns in different parts of the input sequence. This process is repeated with various dilation values, which is akin to scanning at different frequencies. As for the choice of filters, the original paper suggests using random kernels, while later installments use a small set of deterministic patterns. Next, the high-dimensional outputs of this step are pooled across time via proportion of positive values (PPV) pooling, that is, by computing the share of time steps at which the convolutional activations surpass channel-wise bias thresholds, which can be learned from representative examples. As a result, the output of the encoder is a feature vector that summarizes the input time series independent of its length.
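
The encoding steps described above can be sketched in a few lines of NumPy. This is a minimal illustration under simplifying assumptions, not the implementation of Dempster et al. (2020): the function names (`dilated_convolution`, `ppv`, `encode`), the number and length of kernels, the dilation values, and the zero bias thresholds are all hypothetical choices made for this example, the input is univariate, and no padding is applied.

```python
import numpy as np


def dilated_convolution(series, weights, dilation):
    """Slide a kernel over a 1D series with its taps `dilation` steps apart.

    As in CNNs, this is technically a cross-correlation (no kernel flip).
    """
    span = (len(weights) - 1) * dilation  # distance between first and last tap
    n_out = len(series) - span            # number of valid positions (no padding)
    if n_out <= 0:
        raise ValueError("series too short for this kernel and dilation")
    out = np.zeros(n_out)
    for j, w in enumerate(weights):
        out += w * series[j * dilation : j * dilation + n_out]
    return out


def ppv(activations, bias):
    """Proportion of positive values: share of positions where activation > bias."""
    return float(np.mean(activations > bias))


def encode(series, kernels, dilations, biases):
    """Return one PPV feature per (kernel, dilation) pair."""
    features = []
    for k, weights in enumerate(kernels):
        for d, dilation in enumerate(dilations):
            activations = dilated_convolution(series, weights, dilation)
            features.append(ppv(activations, biases[k, d]))
    return np.array(features)


# Illustrative setup (assumed values): 4 random kernels of length 3, 3 dilations.
rng = np.random.default_rng(0)
kernels = rng.normal(size=(4, 3))
kernels -= kernels.mean(axis=1, keepdims=True)  # mean-centre each kernel
dilations = [1, 2, 4]
biases = np.zeros((4, 3))  # assumption: zero thresholds instead of learned ones

short_series = np.sin(np.linspace(0, 10, 50))
long_series = np.sin(np.linspace(0, 40, 200))
print(encode(short_series, kernels, dilations, biases).shape)  # (12,)
print(encode(long_series, kernels, dilations, biases).shape)   # (12,)
```

Both inputs map to a vector with one entry per kernel-dilation pair, even though the series differ in length, which is what allows a downstream classifier to work with inputs of varying size.
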
The transformed features can then serve as the input to any prediction algorithm that can deal with feature redundancy. For example, the original work advises using simple algorithms such as regularized linear models. For a more detailed explanation of the transformations, please refer to the original authors’ paper or to the more detailed descriptions in the second installment of this series.

So if ROCKET achieves state-of-the-art performance while being computationally much more efficient than most methods, what could possibly go wrong? Well, oftentimes performance is not everything. Coming back to the explainability requirements that machine learning models often encounter in practice, is ROCKET a suitable model? As it stands, the answer is no. However, the algorithm requires only slight changes to attach meaning to its embeddings. In the second part, I will demonstrate how this can be achieved by means of a slightly altered implementation, the explainable ROCKET, or X-ROCKET for short.

References

Bagnall, A., Lines, J., Bostrom, A., Large, J., & Keogh, E. (2017). The great time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Mining and Knowledge Discovery, 31, 606–660.

Dempster, A., Petitjean, F., & Webb, G. I. (2020). ROCKET: exceptionally fast and accurate time series classification using random convolutional kernels. Data Mining and Knowledge Discovery, 34(5), 1454–1495.

Dempster, A., Schmidt, D. F., & Webb, G. I. (2021, August). MiniRocket: A very fast (almost) deterministic transform for time series classification. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (pp. 248–257).

Dempster, A., Schmidt, D. F., & Webb, G. I. (2023). Hydra: Competing convolutional kernels for fast and accurate time series classification. Data Mining and Knowledge Discovery, 1–27.

Middlehurst, M., Schäfer, P., & Bagnall, A. (2023). Bake off redux: a review and experimental evaluation of recent time series classification algorithms. arXiv preprint arXiv:2304.13029.

Ruiz, A. P., Flynn, M., Large, J., Middlehurst, M., & Bagnall, A. (2021). The great multivariate time series classification bake off: a review and experimental evaluation of recent algorithmic advances. Data Mining and Knowledge Discovery, 35(2), 401–449.

Tan, C. W., Dempster, A., Bergmeir, C., & Webb, G. I. (2022). MultiRocket: multiple pooling operators and transformations for fast and effective time series classification. Data Mining and Knowledge Discovery, 36(5), 1623–1646.

Zerveas, G., Jayaraman, S., Patel, D., Bhamidipaty, A., & Eickhoff, C. (2021, August). A transformer-based framework for multivariate time series representation learning. In Proceedings of the 27th ACM SIGKDD Conference on Knowledge Discovery & Data Mining (pp. 2114–2124).

This article was created within the “AI-gent3D — AI-supported, generative 3D-Printing” project, funded by the German Federal Ministry of Education and Research (BMBF) with the funding reference 02P20A501 under the coordination of PTKA Karlsruhe.
