What is a Convolutional Neural Network? - Explained

Mark Bugden (PhD)

Neural Networks, particularly Convolutional Neural Networks (CNNs), have surged in popularity over the past few years. They are ubiquitous in many image recognition and processing tasks and have also found applications in several areas not based on image analysis.

In this article, we will give an introduction to CNNs, and answer some of those questions you have been too embarrassed to ask your IT department.

Note: If you are interested in a 30-minute conversation with our dedicated Computer Vision contact person regarding the topics of CNNs and Computer Vision, please take a look at our free Computer Vision Talk offering.

Are CNNs machine-learning or deep-learning?

The short answer to this question is that a CNN is both. To see why, it helps to be clear about what these terms actually mean.

Machine learning is a field of artificial intelligence which focuses on models and algorithms that learn to make predictions based on data, without being explicitly programmed to make those decisions. The models learn patterns and relationships by being trained on existing data, and then apply that knowledge to make predictions in new situations.

Deep learning is a branch of machine learning focused on the development and use of Artificial Neural Networks (ANNs). Artificial Neural Networks were originally inspired by the connections between neurons in the human brain and can be trained to learn hierarchical representations of data. Each neural network consists of collections of neurons called layers, and these layers are connected to each other to form an intricate web. A neural network consisting of many layers of neurons is referred to as ‘deep’, and this is where the term ‘deep-learning’ originates.

A Convolutional Neural Network is a type of neural network in which at least some of the layers are convolutional layers. We will talk more about convolutional layers later in this blog post, but for now, you can think of them as feature extractors - they take some input, apply filters to it in the hope of extracting interesting features, and then pass those features on to the next layer of the neural network.

Are CNNs supervised or unsupervised?

In order for machine learning models, and in particular artificial neural networks, to be able to make accurate predictions, they first require training. CNNs are usually trained through a process known as supervised learning.

To understand supervised learning, suppose that you have a large collection of images of animals, and you want to train a neural network to predict whether the animal in an image is a cat or a dog. In supervised learning, you start by giving the network images that are already labelled as “cat” or “dog”. Each time the network sees an image, it makes a prediction of “cat” or “dog”. The network then compares its prediction to the label for that image, and determines whether the prediction was correct or incorrect. Whenever the network makes an incorrect prediction, it adjusts itself (i.e. it changes the parameters of the neural network) so that it will make better predictions in the future - in other words, the network learns from its mistakes.
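As a minimal sketch of this "learn from mistakes" loop, the classic perceptron update rule (a much simpler model than a CNN, swapped in here purely for illustration) changes its parameters only when a prediction is wrong. The two-number feature vectors and their labels are hypothetical toy data standing in for cat and dog images; a real CNN works on pixels and is trained with gradient descent rather than this rule.

```python
import numpy as np

# Hypothetical toy data: each row is a 2-number feature vector for one image.
# Label +1 means "dog", label -1 means "cat".
X = np.array([[2.0, 1.0], [1.5, 2.0], [3.0, 2.5],          # dogs
              [-1.0, -1.5], [-2.0, -0.5], [-1.5, -2.0]])   # cats
y = np.array([1, 1, 1, -1, -1, -1])

weights = np.zeros(2)  # the model's parameters, initially uninformative
bias = 0.0

def predict(x):
    """Return +1 ("dog") or -1 ("cat") for one feature vector."""
    return 1 if x @ weights + bias > 0 else -1

for epoch in range(10):
    mistakes = 0
    for x_i, y_i in zip(X, y):
        if predict(x_i) != y_i:   # compare the prediction with the label
            weights += y_i * x_i  # adjust the parameters only after a mistake
            bias += y_i
            mistakes += 1
    if mistakes == 0:             # every labelled example is now classified correctly
        break

print([predict(x_i) for x_i in X])
```

Because the toy data is linearly separable, the loop stops as soon as a full pass over the labelled examples produces no mistakes.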

What are CNNs used for?

CNNs are typically used in computer vision tasks - that is, tasks involved in the processing and analysis of images. Common tasks in this area include:

Image classification: CNNs are used to classify images into predefined categories or classes based on their visual content. This enables applications like object recognition, scene classification, and content-based image retrieval.

Object detection: CNNs are used to detect, localize, and classify objects within an image. The standard approach to object detection is bounding-box detection, which provides the coordinates of a box surrounding each object, as well as the class of the object inside the box.

Image segmentation: CNNs are used to partition an image into regions or segments and assign labels to each segment. This could be used, for example, to identify cancerous cells within a medical scan, or to remove a background in image processing.

Facial recognition: CNNs are used to identify and verify individuals based on facial features, commonly employed in security systems, social media tagging, and authentication mechanisms.

Style transfer: CNNs can transfer the artistic style of one image to another, so you could, for example, have your family portrait done in the style of Picasso.

Super-resolution: CNNs can be used to enhance the resolution of images, which has a wide range of applications.

In addition to these common use cases, CNNs can also be used in a variety of other areas:

Natural Language Processing (NLP): CNNs can be applied to text classification tasks by treating text as a 1-dimensional signal and using 1D convolutions over word embeddings.

Audio and sound analysis: CNNs have been used in audio classification by converting the audio into spectrograms and then applying CNNs to the resulting spectrograms, much as they would be applied to images.

Time series data analysis: Time series data, such as financial or biometric data, can be analysed using CNNs to make predictions about things like stock prices and optimal exercise regimes.

Graph and network analysis: graph convolutional networks, which generalise the convolution operation to graph-structured data, can classify nodes in a graph, which is useful for tasks like identifying influential users in social networks.
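To make the 1D idea from the NLP and time-series items above concrete, here is a sketch of a single 1D convolutional filter sliding along a sequence. The 4-dimensional word embeddings and the filter values are hypothetical random numbers; in a trained text CNN both would be learned. The same function applies to a time series if each time step holds a vector of measurements. Taking the maximum over the window scores at the end (max-pooling over time) is one common choice in text CNNs, shown here as an assumption rather than a requirement.

```python
import numpy as np

def conv1d(sequence, kernel):
    """Slide `kernel` along the first axis of `sequence` (no padding).

    sequence: array of shape (length, channels), e.g. one embedding per word
    kernel:   array of shape (width, channels), a filter spanning `width` steps
    returns:  array of shape (length - width + 1,), one score per window
    """
    width = kernel.shape[0]
    n_windows = sequence.shape[0] - width + 1
    return np.array([np.sum(sequence[t:t + width] * kernel) for t in range(n_windows)])

rng = np.random.default_rng(0)
embeddings = rng.normal(size=(7, 4))  # hypothetical: 7 words, 4-dim embeddings
kernel = rng.normal(size=(3, 4))      # hypothetical filter covering 3 words at a time

scores = conv1d(embeddings, kernel)
print(scores.shape)                   # (5,)
# Max-pooling over time keeps the strongest response of this filter.
print(scores.max())
```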

A simplified example of a CNN in practice

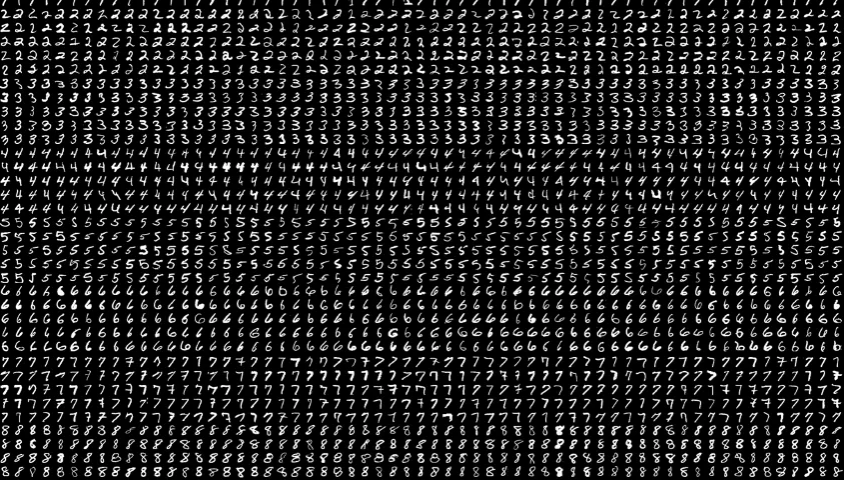

To see an example of a CNN in practice, let us take a look at the (very common) exercise of recognizing handwritten digits. Since every machine learning project requires data on which to train models, we will use the MNIST dataset. MNIST is a large dataset of handwritten digits commonly used for training and testing in various machine learning contexts. The dataset consists of 60,000 training images and 10,000 test images.

Each image in this dataset is 28 pixels wide and 28 pixels high and is represented in Python as a 28x28 array. Each element of this array, i.e. each pixel, is assigned an integer between 0 and 255 corresponding to how bright that pixel is - 0 represents black and 255 represents white.

The first layer of the neural network is the input layer, where data is fed into the network. It consists of 784 nodes, one for each pixel of the 28x28 image (for the convolutional layers, these values are kept in their 2D arrangement rather than flattened into a list). After the input layer come convolutional layers, which perform convolution operations to extract local features from the input image. These are often combined with activation functions, which allow the network to learn more complex relationships in the data; pooling layers, which down-sample the data; and fully connected layers, which process the high-level features of the previous layers and learn to classify or perform other tasks. Finally, we have the output layer, which in this case consists of 10 output nodes, corresponding to the different predictions that the neural network can make (the digits 0-9).
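The following is a minimal NumPy sketch of one forward pass through a network of this kind, under stated assumptions: one convolutional layer with 8 hypothetical 3x3 filters, a ReLU activation, 2x2 max pooling, and one fully connected layer with 10 outputs. The weights are random rather than trained and a random array stands in for an MNIST image, so the "predicted digit" is meaningless; the point is how the shapes change from layer to layer.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv2d(image, kernels):
    """Valid-mode convolution of a (H, W) image with K filters of shape (kh, kw)."""
    k, kh, kw = kernels.shape
    h, w = image.shape
    out = np.zeros((k, h - kh + 1, w - kw + 1))
    for i in range(h - kh + 1):
        for j in range(w - kw + 1):
            patch = image[i:i + kh, j:j + kw]
            # Broadcast the patch against all K filters at once
            out[:, i, j] = np.sum(patch * kernels, axis=(1, 2))
    return out

def relu(x):
    """Activation function: keep positive values, set negative values to zero."""
    return np.maximum(x, 0.0)

def max_pool(x, size=2):
    """Down-sample each feature map by taking the maximum over size x size blocks."""
    k, h, w = x.shape
    x = x[:, :h - h % size, :w - w % size]  # drop any leftover rows/columns
    return x.reshape(k, h // size, size, w // size, size).max(axis=(2, 4))

# Hypothetical stand-ins: a random "image" and untrained random weights.
image = rng.integers(0, 256, size=(28, 28)) / 255.0
kernels = rng.normal(size=(8, 3, 3)) * 0.1            # 8 filters of size 3x3
dense_w = rng.normal(size=(8 * 13 * 13, 10)) * 0.01   # fully connected layer
dense_b = np.zeros(10)

features = max_pool(relu(conv2d(image, kernels)))     # (8, 26, 26) -> (8, 13, 13)
logits = features.reshape(-1) @ dense_w + dense_b     # flatten 1352 values -> 10 outputs
print(features.shape, logits.shape)                   # (8, 13, 13) (10,)
print("predicted digit:", int(np.argmax(logits)))
```

Note that the flattening into a single vector only happens just before the fully connected layer; the convolutional layer works on the 2D image directly.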


When an image is given to the neural network, the pixel values of the image are stored as numeric values in the nodes of the first layer. These values are then transformed by the parameters of the neural network (the weights and biases) before being passed on to the next layer. This continues until the network produces values for the final layer - the node in the final layer with the maximum value is interpreted as the predicted class. During training, the network's output is compared to the ground truth, and the weights are adjusted to improve the prediction, using gradients computed by a process known as backpropagation. Over many iterations of training, the network learns features of the training data, i.e. it adjusts its weights and biases to make more accurate predictions.
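A full backpropagation pass is too long to show here, but a simplified sketch of its final step conveys the idea. Softmax turns the 10 output values into probabilities, the cross-entropy loss measures how far those probabilities are from the ground-truth label, and one gradient-descent step nudges the weights of the final fully connected layer to reduce that loss. The feature vector, weights, label, and learning rate are all hypothetical, and in a real CNN the gradient would also be propagated further back, all the way to the convolutional filters.

```python
import numpy as np

rng = np.random.default_rng(1)

def softmax(z):
    e = np.exp(z - z.max())  # subtract the max for numerical stability
    return e / e.sum()

features = rng.normal(size=20)       # hypothetical output of the earlier layers
W = rng.normal(size=(20, 10)) * 0.1  # final layer: 20 features -> 10 digits
b = np.zeros(10)
label = 7                            # ground truth: the image shows a 7
learning_rate = 0.1

def loss(W, b):
    """Cross-entropy loss for a single example."""
    probs = softmax(features @ W + b)
    return -np.log(probs[label])

before = loss(W, b)
probs = softmax(features @ W + b)
grad_logits = probs.copy()
grad_logits[label] -= 1.0                # d(loss)/d(logits) = probs - one_hot(label)
grad_W = np.outer(features, grad_logits) # chain rule back to the weights
grad_b = grad_logits
W -= learning_rate * grad_W              # gradient-descent update
b -= learning_rate * grad_b
after = loss(W, b)
print(before > after)                    # True: the output moved towards "7"
```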

To show how the predictions of a model change with training, we trained a CNN on the MNIST dataset and evaluated the model after it had seen only small fractions of the dataset. At each stage, we asked the model to predict the digit in the image below. The bars in the graph show the model's confidence for each possible digit - the higher the bar, the more confident the model is that this digit is the correct one. After training on 0.1% of the images, all ten digits receive roughly equal confidence, meaning that the predictions are more or less random - at this stage, the model has not had the chance to learn any features from the data. As the model trains on more of the data, its predictions become more confident and converge on the correct answer (7).
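Confidence bars like these are typically the softmax probabilities of the output layer; that is an assumption here, since the article does not say how its plot was produced. The sketch below uses made-up output values to show why an untrained model gives equal bars while a trained one gives a single tall bar.

```python
import numpy as np

def softmax(z):
    """Turn raw output values into probabilities that sum to 1."""
    e = np.exp(z - np.max(z))
    return e / e.sum()

# Hypothetical outputs of an untrained model: all ten digits score the same.
untrained = softmax(np.zeros(10))
print(np.round(untrained, 2))  # every digit gets probability 0.1

# Hypothetical outputs after training: the value for "7" stands out.
trained = softmax(np.array([0.1, 0.3, 0.2, 0.5, 0.0, 0.1, 0.2, 6.0, 0.4, 0.3]))
print(int(np.argmax(trained)), float(trained[7]))
```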

How is the performance of a CNN measured?

Once a model has been trained to make accurate predictions on the training dataset, you can give it images it has never seen before. Because the model has learned general features such as shape, colour, and texture from the training data, it can make a prediction for a new image despite never having seen it before. The training images and the unseen images are referred to as the train set and the test set, respectively. In the simplest terms, the performance of a model is measured by the accuracy of its predictions on the test set, that is, the fraction of test images it classifies correctly.
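Accuracy is simple to compute once you have the model's predictions and the true labels for the test set. In this sketch, the eight predictions and labels are hypothetical values, not results from any real model.

```python
import numpy as np

def accuracy(predicted, labels):
    """Fraction of test images whose predicted digit matches the true digit."""
    predicted = np.asarray(predicted)
    labels = np.asarray(labels)
    return float(np.mean(predicted == labels))

# Hypothetical predictions for 8 test images and their true labels.
predicted = [7, 2, 1, 0, 4, 1, 4, 9]
labels = [7, 2, 1, 0, 4, 1, 4, 5]
print(accuracy(predicted, labels))  # 0.875 (7 of 8 correct)
```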

What does a convolutional layer truly entail?

To understand what a convolutional layer inside a CNN is, we first need to understand what the term convolution means. The mathematical definition of convolution,

$$(f * g)(t) = \int_{-\infty}^{\infty} f(\tau)\, g(t - \tau)\, d\tau,$$

is a bit difficult to understand unless you have a background in mathematics, so we will focus on convolutions as they are typically used in the context of CNNs. Of course, the two meanings are not really different, and the convolutions used in CNNs are a type of convolution in the mathematical sense of the word, but for simplicity we won’t discuss how they are related. If you are interested in understanding the mathematical background behind convolutional layers, 3Blue1Brown has a fantastic explanation on his YouTube channel.
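For readers who want a concrete glimpse of the connection anyway: for sequences of numbers, the mathematical definition becomes the sum $(f * g)[n] = \sum_k f[k]\, g[n-k]$. The sketch below implements that sum directly and checks it against NumPy's `np.convolve`. It also shows that the sliding dot product used in CNNs is the same operation with the filter flipped; deep-learning libraries generally compute the unflipped version (often called cross-correlation) while still calling it convolution. The signal and filter values are arbitrary.

```python
import numpy as np

def discrete_convolution(f, g):
    """Full discrete convolution: (f * g)[n] = sum_k f[k] * g[n - k]."""
    out = np.zeros(len(f) + len(g) - 1)
    for n in range(len(out)):
        for k in range(len(f)):
            if 0 <= n - k < len(g):
                out[n] += f[k] * g[n - k]
    return out

def sliding_dot_product(signal, kernel):
    """What CNN layers compute: slide the kernel without flipping it (valid positions only)."""
    w = len(kernel)
    return np.array([np.dot(signal[i:i + w], kernel) for i in range(len(signal) - w + 1)])

signal = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
kernel = np.array([1.0, 0.0, -1.0])

# The direct sum agrees with NumPy's convolution.
print(np.allclose(discrete_convolution(signal, kernel), np.convolve(signal, kernel)))
# Flipping the kernel turns the sliding dot product into a (valid-mode) convolution.
print(np.allclose(sliding_dot_product(signal, kernel[::-1]),
                  np.convolve(signal, kernel, mode="valid")))
```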

Let us start with the simplest example of a convolution in image processing. We begin with an image, which we will take from the MNIST dataset we have already seen. We then need a convolutional filter, which in our case we will take to be a 3x3 grid of numbers. The actual numbers here are not so important (we will have more to say about them later), so for now let us assume that they are all equal, say 0.1. To apply the convolutional filter to a single pixel in the image, we superimpose the filter over the image so that it covers that pixel and its surrounding pixels. We then take a dot product: we multiply each value of the filter by the image value underneath it, sum up these products, and use the result as the value of the corresponding pixel in the new image. Applying this process to every pixel in the original image, moving the filter across it step by step, creates a new, filtered image. (In the simplest version, pixels on the border, where the filter would hang over the edge of the image, are skipped, so the new image is slightly smaller than the original.)

We can implement this in Python fairly simply:

```python
import numpy as np

# Define a 3x3 convolutional filter with all values equal to 0.1
conv_filter = np.array([[0.1, 0.1, 0.1],
                        [0.1, 0.1, 0.1],
                        [0.1, 0.1, 0.1]])

# Apply the convolution operation to a 2D (grayscale) image, such as an MNIST digit
def apply_convolution(image, kernel):
    image_height, image_width = image.shape
    filter_height, filter_width = kernel.shape

    # Only positions where the filter fits entirely inside the image are computed,
    # so the output is slightly smaller than the input (26x26 for a 28x28 image)
    output_height = image_height - filter_height + 1
    output_width = image_width - filter_width + 1
    output = np.zeros((output_height, output_width))

    for i in range(output_height):
        for j in range(output_width):
            # Multiply the filter with the patch underneath it and sum the products
            patch = image[i:i + filter_height, j:j + filter_width]
            output[i, j] = np.sum(patch * kernel)

    return output
```

Of course, we usually don’t bother coding this by hand - there are existing libraries that handle all of this for us, but it is nice to know that we could do these calculations manually if we needed to.

In our case the filter consists of equal numbers, so the effect of the filter is to (approximately) average the values of the surrounding pixels, which creates a blurring effect in the filtered image. We can achieve other image processing effects by choosing different filters: a filter with negative values around the outside and a large value in the center creates a sharpening effect, whilst a filter with negative values on the left and positive values on the right produces an image that is bright wherever the brightness increases from left to right. In other words, this filter detects vertical edges in the original image. More generally, convolutional filters can be used to automatically detect features of an image.
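The three effects described above can be checked on a tiny image. This sketch uses a hypothetical 6x6 image that is black on the left and white on the right, together with commonly used example filters (exact weights of 1/9 for the blur, so each output is a true average, and a standard 3x3 sharpening kernel); these particular values are illustrative choices, not the only possible ones.

```python
import numpy as np

def apply_convolution(image, kernel):
    """Valid-mode sliding dot product of a 2D kernel over a 2D image."""
    kh, kw = kernel.shape
    out_h, out_w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    out = np.zeros((out_h, out_w))
    for i in range(out_h):
        for j in range(out_w):
            out[i, j] = np.sum(image[i:i + kh, j:j + kw] * kernel)
    return out

# Hypothetical 6x6 test image: black (0) on the left half, white (255) on the right half.
image = np.zeros((6, 6))
image[:, 3:] = 255.0

# Blur: equal weights of 1/9, so each output pixel is the average of a 3x3 patch.
blur = np.full((3, 3), 1 / 9)
# Sharpen: negative values around the outside, a large value in the centre (weights sum to 1).
sharpen = np.array([[-1, -1, -1],
                    [-1,  9, -1],
                    [-1, -1, -1]])
# Vertical edges: negative on the left, positive on the right.
vertical_edges = np.array([[-1, 0, 1],
                           [-1, 0, 1],
                           [-1, 0, 1]])

blurred = apply_convolution(image, blur)         # the sharp boundary becomes a gradual ramp
sharpened = apply_convolution(image, sharpen)    # the contrast at the boundary is exaggerated
edges = apply_convolution(image, vertical_edges)
print(edges)  # non-zero only where the filter straddles the dark-to-bright boundary
```

Because the blur and sharpen weights each sum to 1, both leave regions of constant brightness unchanged; they only alter the image where brightness varies.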

Now that we understand how convolution works, the concept of a convolutional layer in a neural network is quite simple. A convolutional layer consists of a specified number of filters: it takes an input image, applies each filter to it, and outputs the filtered images, one per filter. The filters act to detect features of the input image, such as vertical and horizontal lines. Since convolutional layers detect features in an input image, we can take the output of one convolutional layer and use it as the input of another, stacking layers on top of each other so that the model can identify features within features. The fascinating thing about CNNs is that the filters are not coded into the model by hand - they are precisely the weights of the neural network, which get updated automatically during training. The model learns which features it needs to detect based on the data.
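To illustrate that filters are weights learned from data, here is a minimal sketch under strong simplifying assumptions: a single 3x3 filter, a random 12x12 image, and a training target produced by a hypothetical vertical-edge filter. Starting from all zeros, plain gradient descent on the mean squared error recovers that filter. A real CNN learns many filters at once from a classification loss, with the gradients supplied by backpropagation rather than written out by hand as they are here.

```python
import numpy as np

rng = np.random.default_rng(0)

def conv(image, kernel):
    """Valid-mode sliding dot product, as used in CNN layers."""
    kh, kw = kernel.shape
    h, w = image.shape[0] - kh + 1, image.shape[1] - kw + 1
    return np.array([[np.sum(image[i:i + kh, j:j + kw] * kernel) for j in range(w)]
                     for i in range(h)])

# Hypothetical setup: the "true" feature the layer should discover is a
# vertical-edge detector, and the training target is that filter's output.
true_kernel = np.array([[-1.0, 0.0, 1.0]] * 3)
image = rng.normal(size=(12, 12))
target = conv(image, true_kernel)           # shape (10, 10)

kernel = np.zeros((3, 3))                   # start from a filter that detects nothing
learning_rate = 0.1
for step in range(500):
    error = conv(image, kernel) - target    # shape (10, 10)
    # Gradient of the mean squared error with respect to each filter weight:
    # weight (a, b) multiplies image[i + a, j + b] for every output pixel (i, j).
    grad = np.zeros_like(kernel)
    for a in range(3):
        for b in range(3):
            grad[a, b] = 2 * np.mean(error * image[a:a + 10, b:b + 10])
    kernel -= learning_rate * grad          # gradient-descent update of the filter

print(np.round(kernel, 3))  # close to true_kernel
```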

Conclusion

Convolutional Neural Networks are extremely versatile tools and have found widespread use in an impressive array of applications. Together with fully-connected neural networks and recurrent neural networks, CNNs form a core part of many modern machine-learning models. If you would like to know more about how we have used CNNs at dida, check out the project descriptions for the following projects:

Frequently Asked Questions

What are some common usages and variations of a CNN?

LeNet-5: One of the first CNN architectures, used to perform handwritten digit recognition and other classification tasks.

ResNet: Residual networks include skip connections that allow the training of very deep models.

U-Net: A CNN which begins with a contracting network, followed by an expanding network. The “down and then up” architecture is often depicted as a U, whence the model gets its name. Useful for image segmentation tasks.

YOLO: YOLO and its variants are real-time object detection networks that focus on speed and efficiency.

DenseNet: A CNN where each layer receives input from ALL previous layers, not just the most recent. This encourages the model to reuse features and reduces the vanishing gradient problem.

Are there any Python packages that will allow me to more easily use CNNs?

Yes, it is very easy to implement CNNs in Python, and both TensorFlow and PyTorch have simple tutorials that will help get you started in creating and training your first CNN. In addition, TorchVision (a computer-vision package designed for use with PyTorch) has an extensive collection of models that have been pre-trained on large datasets of labeled images and can often be used out-of-the-box on many image classification and segmentation problems.

Contact

If you would like to speak with us about this topic, please reach out and we will schedule an introductory meeting right away.