Data-centric Machine Learning: Making customized ML solutions production-ready

David Berscheid

By 2021, there is little doubt that Machine Learning (ML) brings great potential to today’s world. In a study by Bitkom, 30% of companies in Germany state that they have planned or least discussed attempts to leverage the value of ML. But while the companies’ willingness to invest in ML is rising, Accenture estimates that 80% – 85% of these projects remain a proof of concept and are not brought into production.

Therefore at dida, we made it our core mission to bridge that gap between proof of concept and production software, which we achieve by applying data-centric techniques, among other things.

In this article, we will

see why many ML Projects do not make it into production,

introduce the concepts of model- and data-centric ML, and

give examples how we at dida improve projects by applying data-centric techniques.

Model-centric ML: Why many industries struggle to leverage the potential of ML in production

Model-centric ML describes ML solutions that mainly focus on the optimization of model architectures and its (hyper)parameters. ML researchers are working hard to find better and better neural network architectures to push evaluation metrics on common benchmark datasets like mnist or ImageNet.

In many industries, ML engineers implement powerful state-of-the-art models into their applications and run experiments on different architectures and hyperparameters. Graphic 1 depicts a typical model-centric ML process. Note the iterative nature of model and hyperparameter optimization, whereas data collection, preprocessing or deployment remain one-time steps.

This model-centric approach works well for some industries, such as the ad industry. There, companies like Google or Facebook possess huge amounts of user data, usually available in a standardised format, with immediate feedback opportunities and large ML departments.

But for most other industries that is not the case. Think of industries like manufacturing, engineering or healthcare. These typically face the following three problems:

1. Need for highly customized solutions

In contrast to Google’s ad scenario, a manufacturing company with multiple products is not able to apply only one ML system to detect production errors in its various products. Instead, it would need a separately trained ML model for each manufactured product.

While Google can afford that a whole ML department works on every tiny optimization problem, a manufacturing company with the need for multiple ML solutions cannot follow Google’s blueprint with respect to the magnitude of their ML department.

2. Limited number of data points

More often than not, industries do not have millions of data points. Instead, they usually have to work with small datasets (i.e. 10^2 – 10^3 relevant data points), which - for production standards - are typically prone to unsatisfactory results, if their ML solution is too model-centric.

Imagine the use case, where a wind turbines company would like to develop a predictive maintenance solution, that is able to detect wear on its wind turbines by analyzing drone images. Apart from thousands of images with ‘healthy’ wind turbines, the sample size of images that show actual wear and tear on its surface might lie around 100, and thus might be 0.0001% of an ad company’s sample size.

It becomes clear, that a model-centric approach focusing on the optimization of the model architecture and not on the weak spot – the limited number of relevant images – would not yield satisfying results.

3. Large gap between proof of concepts and production software

In 2019 research papers were published that claim to be more accurate in diagnosing tumor in an early stage than trained radiologists would be. But why doesn’t every hospital make use of such great ML systems already to optimize its diagnoses?

It’s because of a wide gap between proof of concept and hospital production software. If every hospital used the same machines to create its patients’ lung scans and every hospital would save these images in a central and accessible place, then chances are that the production software would work as intended.

But in reality, this is not the case. Hospitals use different machines, bought from different manufacturers, built in different years and consequently with different scan quality. Additionally, data security is a highly important issue for hospitals and healthcare, which prohibits to simply upload all scans to a centrally saved system.

Therefore, hospitals usually have to overcome a wide gap, where each hospital might have to use its own ML system to fit to its own technical and organizational requirements. Then, model-centric ML often falls short.

Companies that face some of the above-mentioned problems and have made already attempts to leverage ML for their business would probably agree, that purely model-centric ML usually does not convince enough to be put into production, which brings us to data-centric ML.

Data-centric ML: Chances for industries with customized needs

Data-centric ML refers to techniques related to the optimization of data within ML projects. While improving data-related aspects should not be something new for ML scientists, Andrew Ng, a leading ML technologist, is advertising efforts to do so.

In a recent talk he states, that from a sample of recent ML-related publications, 99% of the papers were model-centric papers and only 1% of examined papers was focusing on data.

This extreme ratio shows that ML research heavily focuses on the optimization of ML models, whereas data-related aspects are often neglected. Thus, advocates of data-centric ML are pledging for more solutions regarding the optimization of data in ML workflows.

As graphic 2 depicts, the previous model-centric approach to ML is extended by iterating back from deployment to the stage of data collection and data pre-processing. Especially for situations with a need for customized solutions, little data, and wide proof-of-concept-to-production-gaps the following aspects of data-centric ML can be impactful:

1. Iterative evaluations

While in classical software development projects the process might end with the deployment of a functionality (not taking into account update cycles), in data-centric ML projects this is not the case. During production usage, the ML model will see data that it has not seen before, which will also inevitably differ from the algorithm’s training data.

Therefore, evaluation of the model’s quality should be an iterative process instead of a one-time stage. Timely feedback from production systems for example allows to recognize and react to distributional data drifts, and if wanted, pose as a prerequisite for online learning.

2. Data collection

In a data-centric approach, data collection is also an iterative process, especially for companies that do not have millions of data points. This is an obvious technique to increase the performance of ML systems, while at the same time it is important to say, that quality is often more important than purely quantity of data.

3. Data label quality

While iterative data collection can help to increase the outcome, so does high quality data labeling. Data-centric ML argues that a huge number of badly labeled images or texts lead to worse results than fewer but accurate ones.

Having multiple labelers furthermore helps to spot inconsistencies in labels. A powerful best practice is to find the inconsistent labels and redefine the labeling instructions especially for these cases. The most important aspect of data labeling though is to include domain knowledge, which is often missing if data labeling is being outsourced or not handled with the appropriate care.

4. Data augmentation

Data augmentation summarizes techniques to increase the number of data points in your sample. Existing images for example can be flipped, rotated, zoomed, or cropped to create additional ones. Similar techniques exist in the NLP context. Data-centric ML uses data augmentation, especially to increase the amount of relevant data points, i.e. the number of defective production parts.

5. Integration of domain knowledge

In data-centric ML, domain knowledge has a great value. Often, ML engineers, labelers and other ‘domain outsiders’ cannot identify subtle differences, whereas domain experts can. While it is intuitive to include these experts into the development processes, in reality this exchange is often missing. The result is an ML system that would have provided higher performance if more domain knowledge had been accessible, and a higher probability that it remains a proof of concept, as results would not convince in a production setting.

Data-centric ML at dida: Examples and experiences

While in theory the above-mentioned aspects sound reasonable and maybe even obvious, let’s take a look at some examples from previous projects at dida to see how we typically improve performances through data-centric techniques. You might quickly realize that quite often it is not as trivial as it first seems.

Iterative evaluations detect data drifts

For Enpal, a solar panel company, we were creating a ML solution that could estimate the amount and position of solar panels fitting on a given roof via satellite imagery. Through iterative evaluations, we were able to detect data drift in production, as the images that were fed to the production system started to differ from the training data.

Graphic 3 shows four situations which would have led to a poor performance, if we would not have had constant evaluation, like poor contrast of roof sides, very small objects on the roof (which would not allow to place a solar panel there), low spatial resolution or rare roof shapes (like connected roofs).

Keeping control of annotation process ensures label quality

Again, using the solar panel example, it is easy to show the differences in data labels and their effect. If you compare image 1 and 2, you can see that the labeling person of 2 decided to not mark the small obstacle on the middle roof and not the triangular-shaped obstacle on the lower roof. In image 3 it looks like the person drew the labels by hand, which results in uneven lines and round forms instead of clear edges. And then there is person 4 – we have no idea, what this person did ;)

Jokes aside, one can see that the quality in labels can differ a lot. How should a ML model be able to perform well if the input data is weak or very inconsistent? Is it clear to the labelers what a high quality label looks like in contrast to an average one?

For this reason, we at dida typically do not outsource this step, but have our own employees label the data in our projects (at least until we are convinced the labeling scheme is well-tested and comes with transparent instructions and labeling quality standards). This way we have more control over the process and can adjust the labeling scheme if necessary.

Attention to finicky details pays off

With the example of a previous project of a large chemical corporation, whose name we cannot share, we would like to show that creating labels in not always a trivial task. Graphic 5 depicts a part of an invoice. The goal was to automatically detect the relevant information and import it in a structured form into the respective ERP system.

Non-trivial regarding the labels is the decision how much information a label should contain. Is ‘Inhalt 500g’ or simply ‘500g’ the better label? Even more complicated is the decision if ‘Anzahl 1’ and ‘Menge 2’ should be labeled as the same class, as they can be seen as synonyms for quantity or two different classes? Through experiences from our previous NLP projects and experiments that we ran, results were most convincing when we chose the labeling scheme that you can see in the graphic – but it was definitely not straight forward.

This touches a very general point already mentioned above: in most real world ML projects the data to be used for training and testing is not already "just there", as it is in Kaggle contests or benchmarking datasets, but depends upon a number of decisions to be taken previously. Although for some of them (like possibly the above example) it seems pedantic to even consider and discuss them, we found that a lot hinges on these details.

Help from domain experts is vital

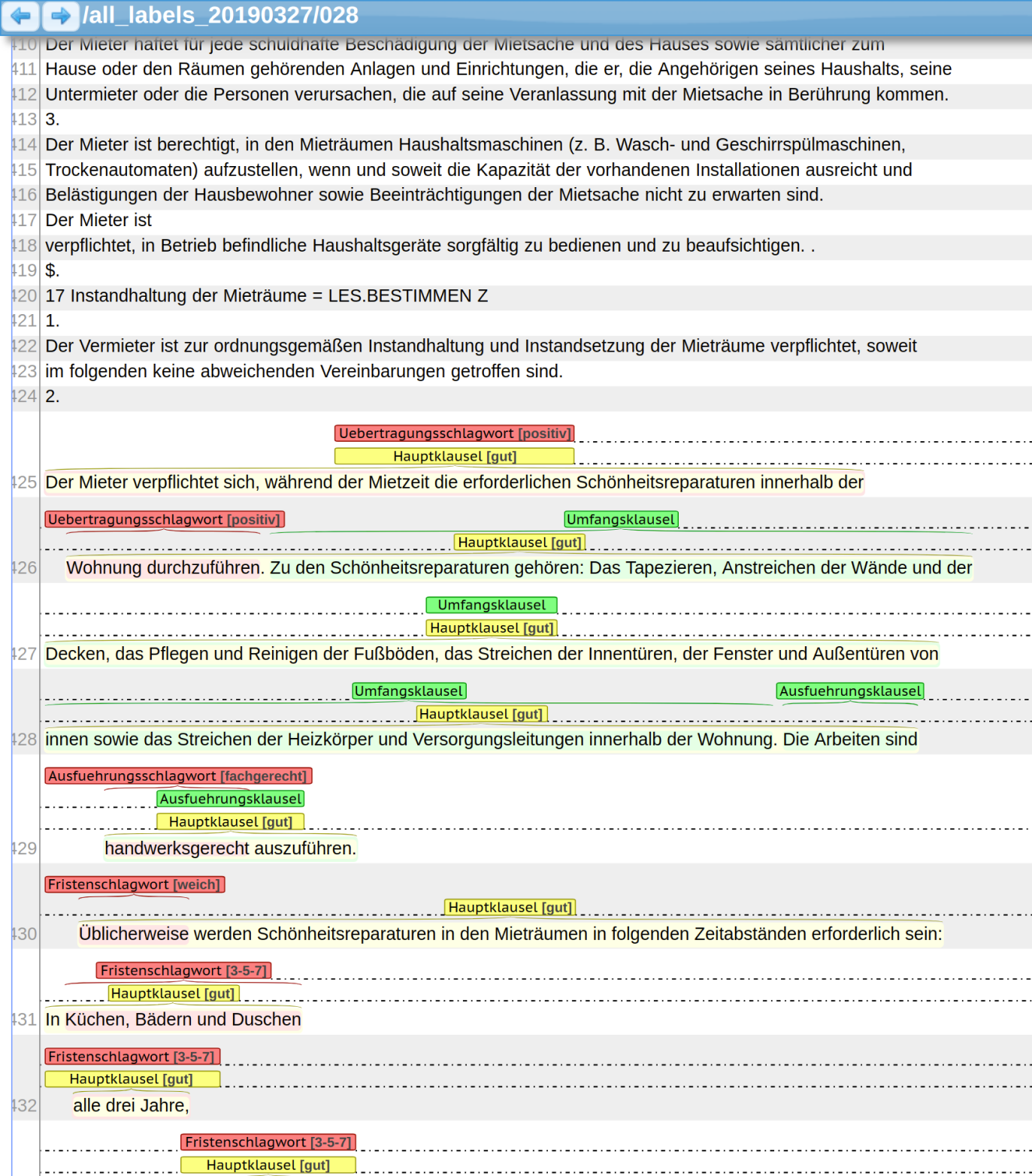

For our client Mieterengel, an online tenant protection club, we were building a ML solution that is able to analyze tenancy contracts, examine if certain paragraphs are legally valid and prepare an answer that a lawyer of Mieterengel can then send to their members. This project is a very good example, why domain knowledge is needed in ML projects.

At dida, we are experts in ML but have little knowledge about law issues. Therefore, we scheduled regular joint workshops with tenancy lawyers to develop and constantly evaluate our labeling scheme. Multiple times in our joint workshops, the law experts could hint us to small aspects in the contract labels that could still be improved in order to stick as closely as possible to common legal logic.

Conclusion

Data-centric ML techniques are a way to increase the performance of ML solutions. They pose a valuable complement to the popular and sophisticated optimizations of algorithms, architectures and hyperparameters.

While for us at dida data-centric work is not this new trend, as which it is framed by some members of the ML community, but rather common practice, it is certainly important. Techniques for improving the data quality during projects has often proved essential for dida, which is why we appreciate a rising awareness for data-centric techniques and are looking forward to new approaches that might follow from this new attention.

If you would like to know more about data-centric ML or find out, which customized solutions might be relevant for your business, feel free to contact here or send us a mail to info@dida.do.

Contact

If you would like to speak with us about this topic, please reach out and we will schedule an introductory meeting right away.