What is a Convolutional Neural Network? - Explained

Mark Bugden (PhD)

Tiago Sanona

Edit Szügyi

Emilius Richter

Madina Kasymova

Felix Brunner

Frank Weilandt (PhD)

Konrad Mundinger

Fabian Gringel

Moritz Besser

Dmitrii Iakushechkin

Fabian Dechent

Augusto Stoffel (PhD)

Tiago Sanona

Johan Dettmar

Matthias Werner

William Clemens (PhD)

Fabian Gringel

William Clemens (PhD)

Lorenzo Melchior

Matthias Werner

Nelson Martins (PhD)

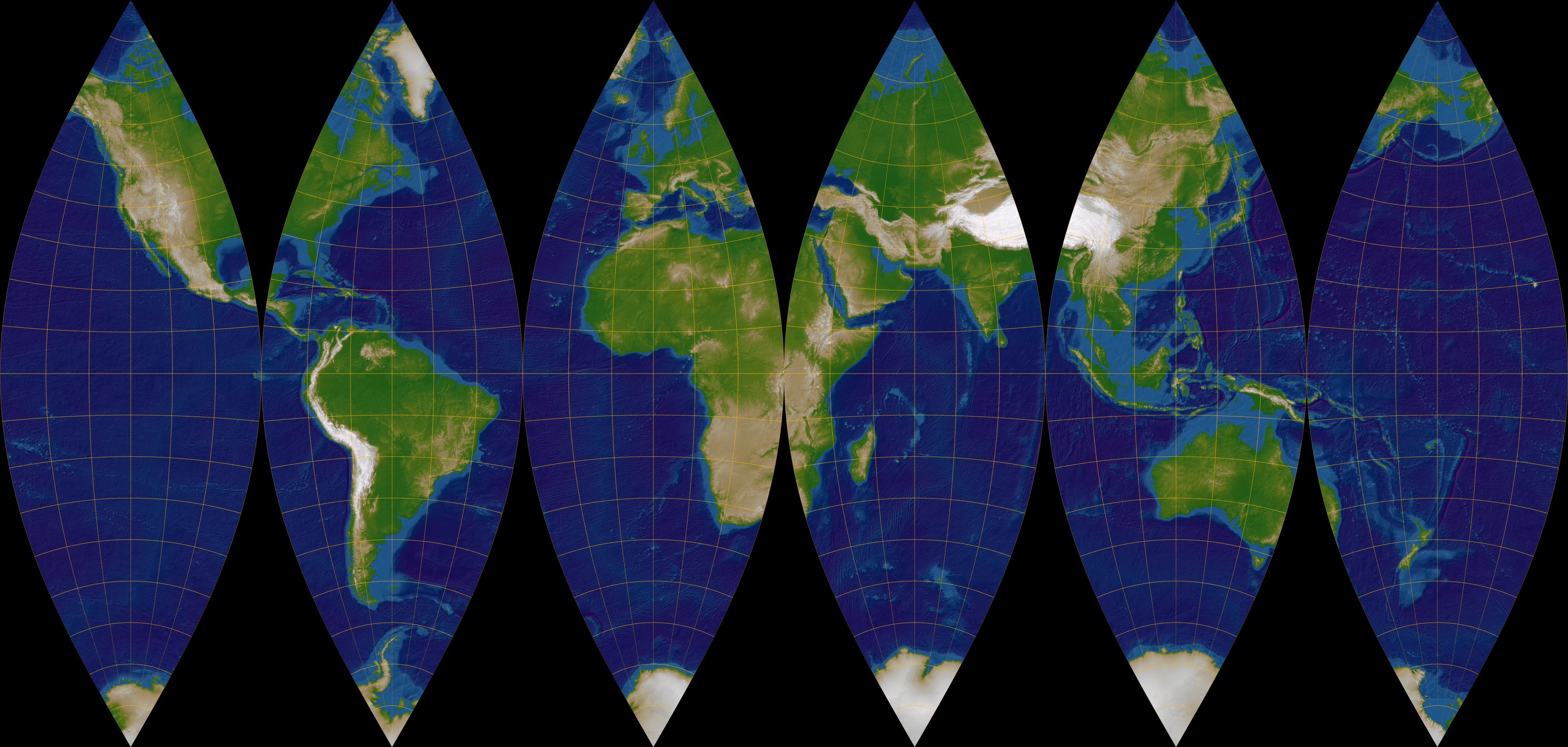

The HEGEMON project establishes a sovereign benchmarking framework for AI foundation models and AI applications related to the automated analysis of geoinformation critical to national security.

Learn how dida used AI in combination with depth cameras to measure cows' respiratory rates and detect signs of advancing illness, improving health monitoring and animal welfare in dairy farming.

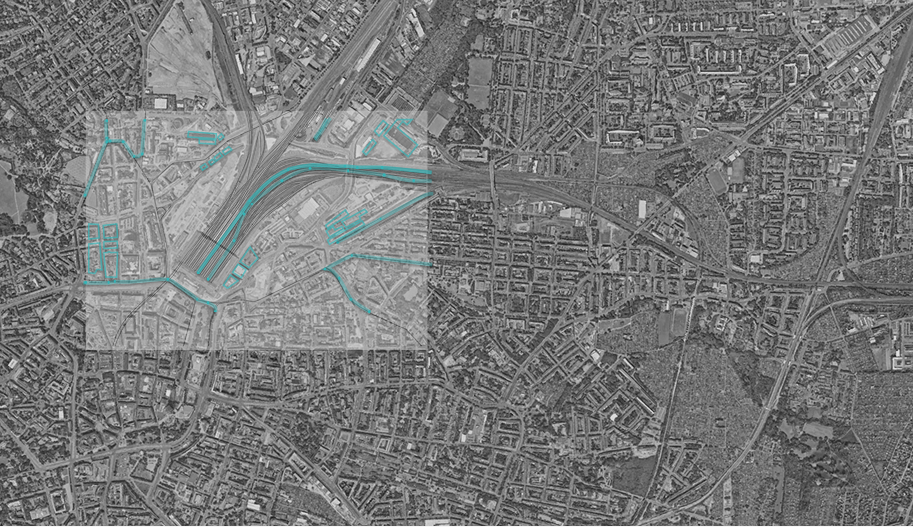

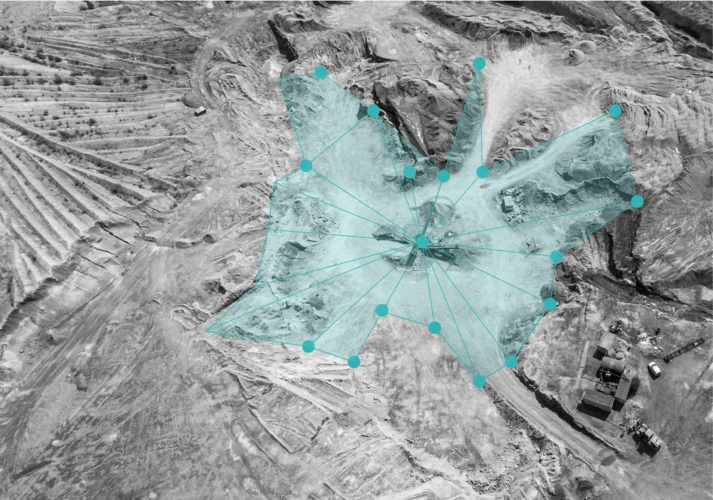

Our AmoKI project uses machine learning and geospatial data to monitor Germany’s open-pit mines, estimating depth, tracking volume changes, and detecting environmental impacts.

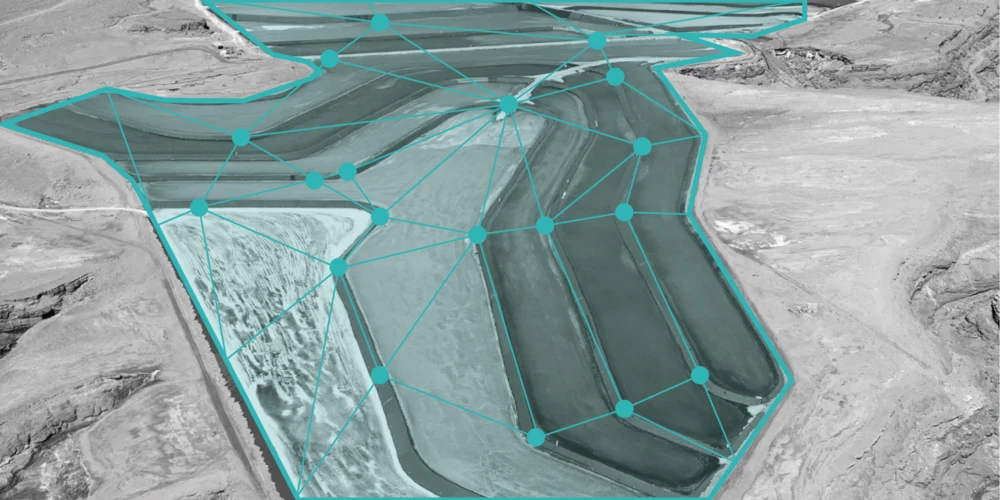

Using various satellite images, a suite of computer vision models and mathematical modeling is applied to detect, segment and analyze tailings with respect to their volume and mineralogical content.

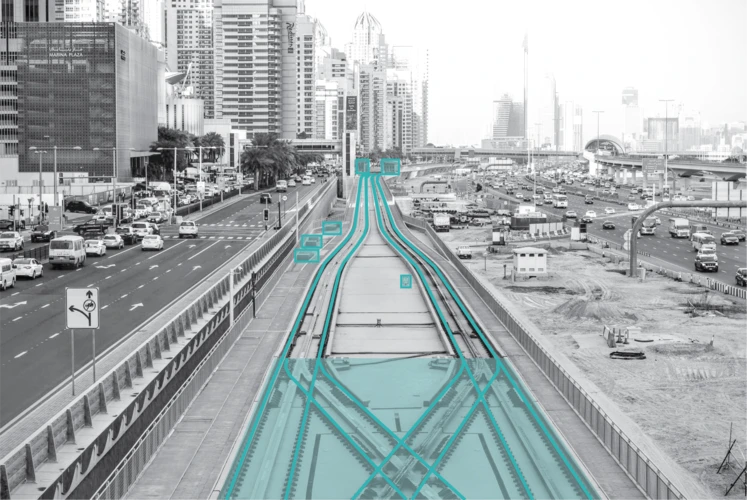

Detect anomalous objects from videos taken by cameras on a train.

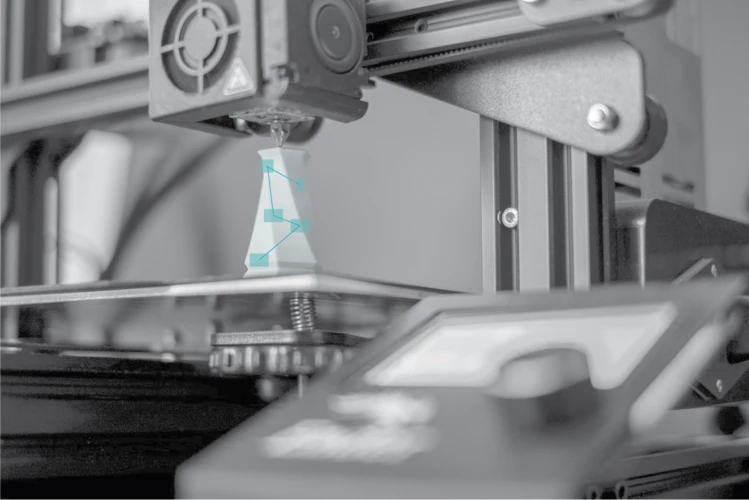

AI-based detection of nozzle contamination in industrial 3D printing.

We developed a multi-level security system with facial recognition for automatic access control.

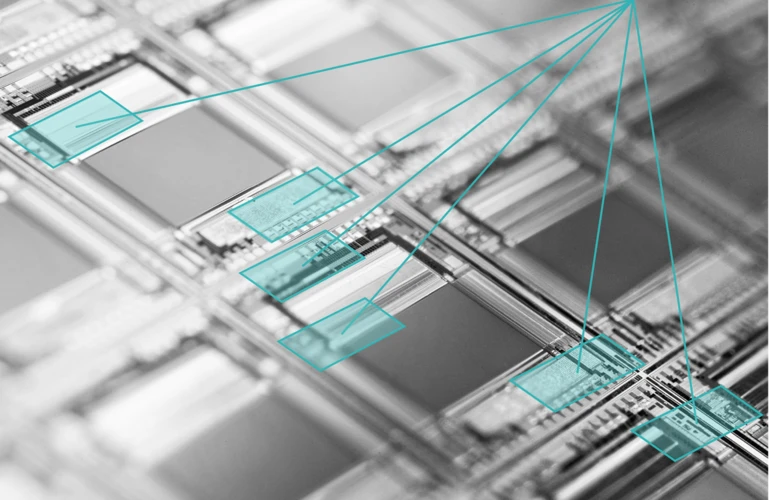

AI-supported optical defect detection for semiconductor laser production.

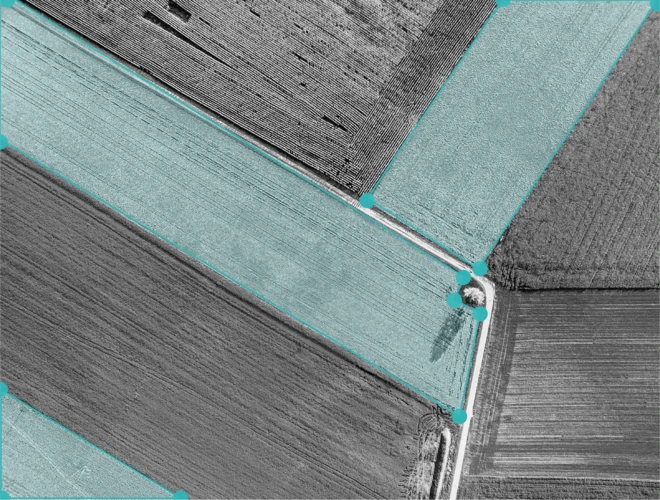

We predict crop types from satellite data to support modern agriculture.

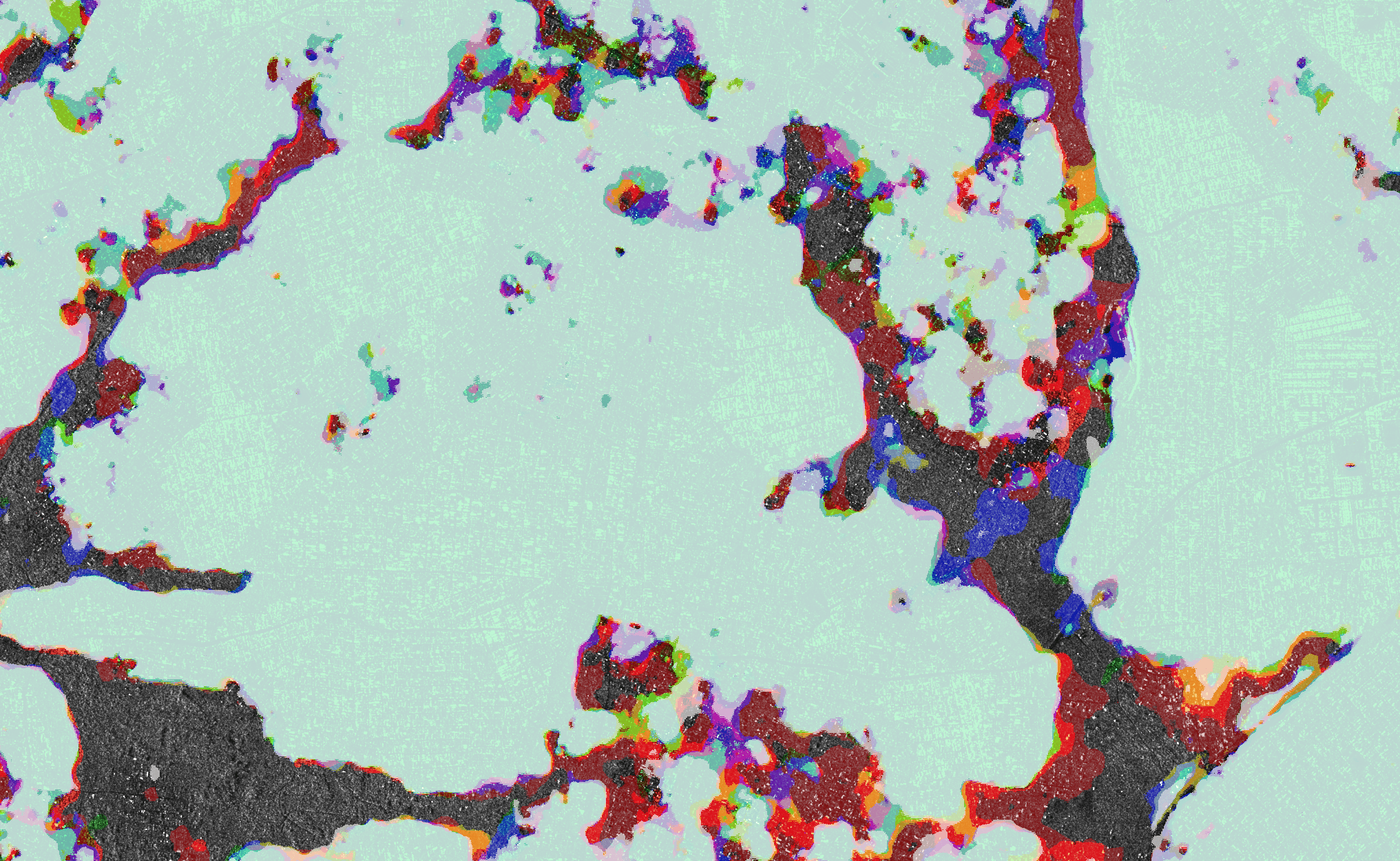

An image segmentation algorithm that supports sustainable city planning.

See how we developed machine learning software, based on satellite data and object detection, to stop environmental destruction caused by illegal mines.

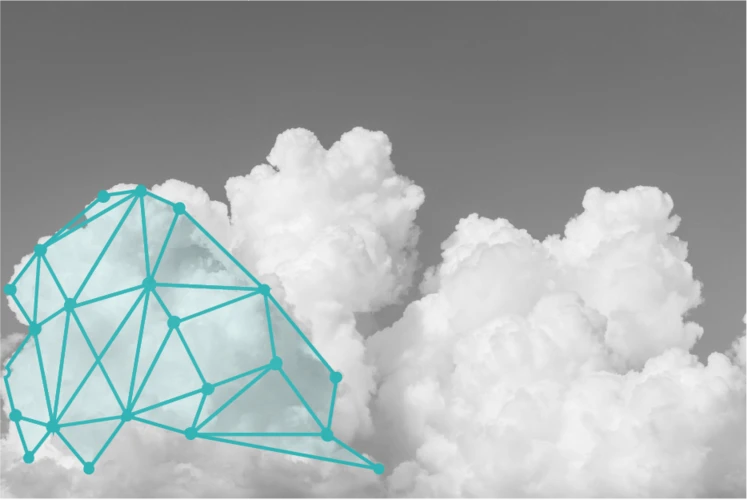

We automated the detection of certain cloud structures for Deutscher Wetterdienst (DWD).

Creative solutions enabled us to automate the process of planning solar systems.

Christof Zink, Michael Ekterai, Dominik Martin, William Clemens, Angela Maennel, Konrad Mundinger, Lorenz Richter, Paul Crump, Andrea Knigge

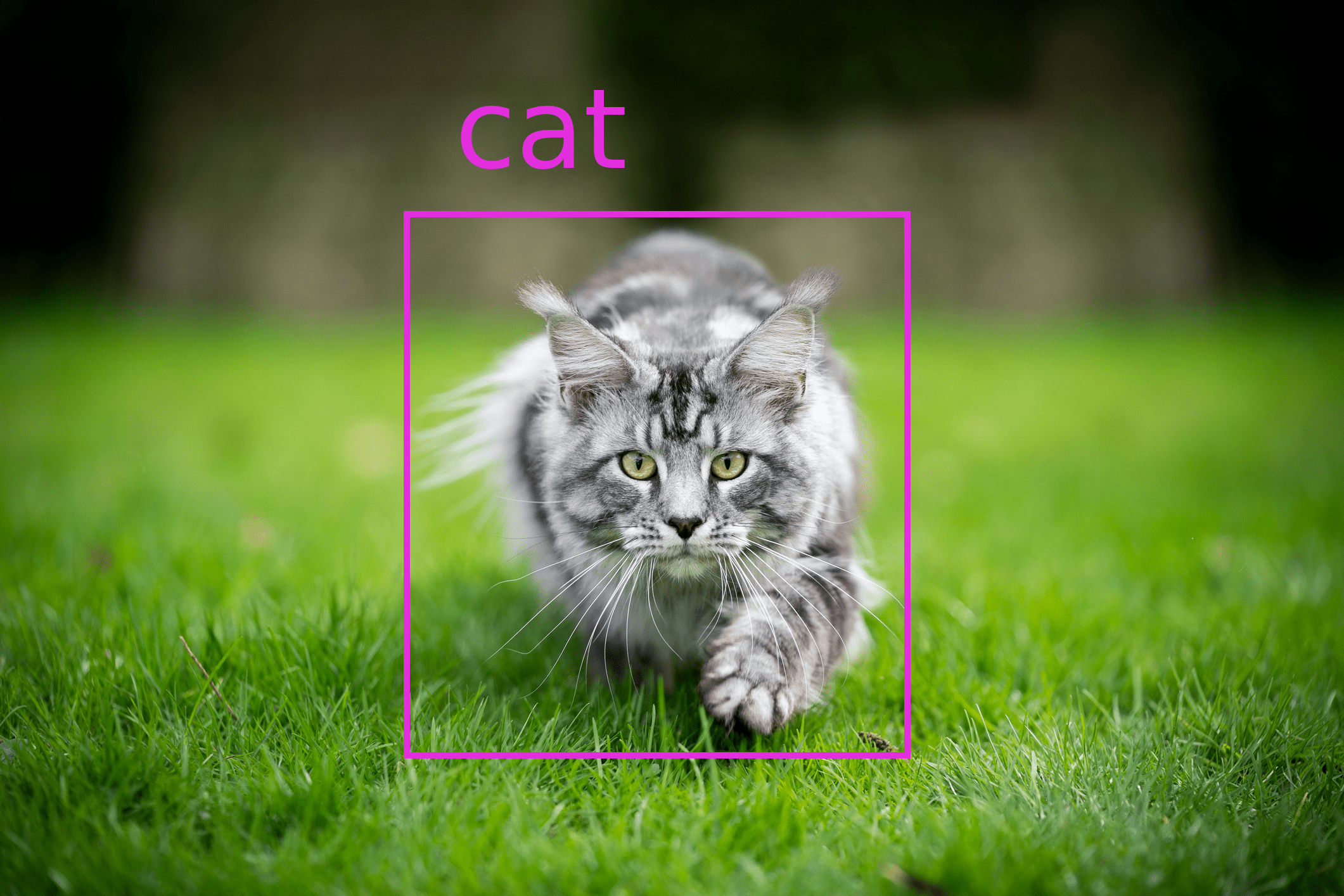

The optical inspection of the surfaces of diode lasers, especially the p-sides and facets, is an essential part of the quality control in the laser fabrication procedure. With reliable, fast, and flexible optical inspection processes, it is possible to identify and eliminate defects, accelerate device selection, reduce production costs, and shorten the cycle time for product development.

Luis Oala, Cosmas Heiß, Jan Macdonald, Maximilian März, Gitta Kutyniok, Wojciech Samek

The quantitative detection of failure modes is important for making deep neural networks reliable and usable at scale. We consider three examples for common failure modes in image reconstruction and demonstrate the potential of uncertainty quantification as a fine-grained alarm system.

Frank Weilandt, Robert Behling, Romulo Goncalves, Arash Madadi, Lorenz Richter, Tiago Sanona, Daniel Spengler, Jona Welsch

In this article, we propose a deep learning-based algorithm, built on the celebrated transformer architecture, for classifying crop types from Sentinel-1 and Sentinel-2 time series data. Crucially, we enable our algorithm to do early classification, i.e., predict crop types at arbitrary time points early in the year with a single trained model (progressive intra-season classification). Such early-season predictions are of practical relevance, for instance, for yield forecasts or the modeling of agricultural water balances, and are therefore important for the public as well as the private sector. Furthermore, we improve the mechanism of combining different data sources for the prediction task, allowing both optical and radar data as inputs (multi-modal data fusion) without the need for temporal interpolation. We demonstrate the effectiveness of our approach on an extensive data set from three federal states of Germany, reaching an average F1 score of 0.92 when using data from a complete growing season to predict the eight most important crop types, and an F1 score above 0.8 when doing early classification at least one month before harvest time. In carefully chosen experiments, we show that our model generalizes well in time and space.
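The abstract reports an "average F1 score". As a reminder of what that metric measures, here is a minimal Python sketch of macro-averaged F1, assuming "average" means an unweighted mean of the per-class F1 scores. The abstract does not state which averaging scheme the paper uses, and the crop labels below are made-up toy data, not results from the study.

```python
from collections import Counter


def macro_f1(y_true, y_pred):
    """Unweighted mean of per-class F1 scores (macro-averaged F1)."""
    classes = sorted(set(y_true) | set(y_pred))
    tp, fp, fn = Counter(), Counter(), Counter()
    for t, p in zip(y_true, y_pred):
        if t == p:
            tp[t] += 1  # correct prediction for class t
        else:
            fp[p] += 1  # predicted p, but it was wrong
            fn[t] += 1  # true class t was missed
    scores = []
    for c in classes:
        # F1 = 2*TP / (2*TP + FP + FN), equivalent to the harmonic mean of precision and recall
        denom = 2 * tp[c] + fp[c] + fn[c]
        scores.append(2 * tp[c] / denom if denom else 0.0)
    return sum(scores) / len(scores)


# Hypothetical toy labels, for illustration only
y_true = ["wheat", "wheat", "maize", "rapeseed"]
y_pred = ["wheat", "maize", "maize", "rapeseed"]
print(round(macro_f1(y_true, y_pred), 4))  # 0.7778
```

Macro-averaging gives every crop type equal weight, so rare classes influence the score as much as common ones; a support-weighted average would instead favor the dominant crops.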

Theophil Trippe, Martin Genzel, Jan Macdonald, Maximilian März

This work presents a novel deep-learning-based pipeline for the inverse problem of image deblurring, leveraging augmentation and pre-training with synthetic data. Our results build on our winning submission to the recent Helsinki Deblur Challenge 2021, whose goal was to explore the limits of state-of-the-art deblurring algorithms in a real-world data setting.

Jan Macdonald, Lars Ruthotto

We present an improved technique for susceptibility artifact correction in echo-planar imaging (EPI), a widely used ultra-fast magnetic resonance imaging (MRI) technique. Our method corrects geometric deformations and intensity modulations present in EPI images.

Jan Macdonald, Maximilian März, Luis Oala, Wojciech Samek

This work investigates the detection of instabilities that may occur when utilizing deep learning models for image reconstruction tasks. Although neural networks often empirically outperform traditional reconstruction methods, their usage for sensitive medical applications remains controversial.

Martin Genzel, Ingo Gühring, Jan Macdonald, Maximilian März

This work is concerned with the following fundamental question in scientific machine learning: Can deep-learning-based methods solve noise-free inverse problems to near-perfect accuracy? Positive evidence is provided for the first time, focusing on a prototypical computed tomography (CT) setup.

Martin Genzel, Jan Macdonald, Maximilian März

In the past five years, deep learning methods have become state-of-the-art in solving various inverse problems. Before such approaches can find application in safety-critical fields, a verification of their reliability appears mandatory. Recent works have pointed out instabilities of deep neural networks for several image reconstruction tasks.

Fabian Dechent

May 23rd, 2025

Ma Li (PhD)

May 23rd, 2025

Jan Macdonald (PhD)

May 31st, 2024

Maximilian Trescher (PhD)

May 31st, 2024

William Clemens (PhD)

February 26th, 2020

William Clemens (PhD)

May 11th, 2022

Moritz Besser and Jona Welsch

December 6th, 2021