Background

Pilots receive a weather report called a METAR that describes conditions at the destination airport. Currently, it is compiled manually from observations made by trained meteorologists on the ground, but the DWD (Deutscher Wetterdienst, Germany's national meteorological service) is working to automate as much of it as possible to free up the meteorologists’ time for other tasks. We focus on the section of this report that concerns convective clouds.

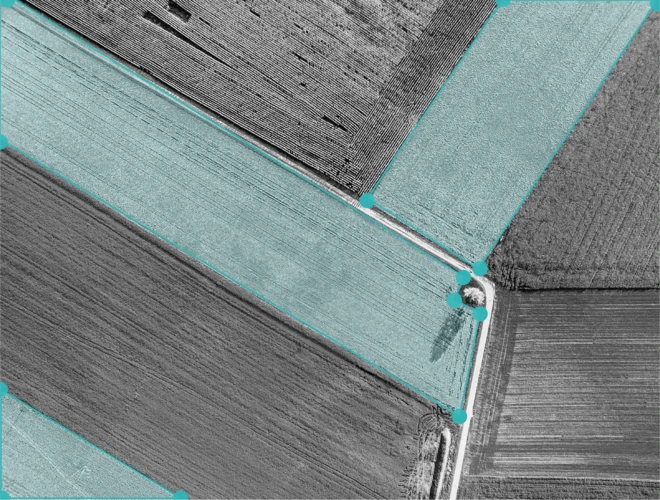

For an automated system to be reliable and trusted, it must have multiple layers of redundancy, so its models must be based on different data sources. Algorithms using radar and lightning data already exist; our goal was to provide an independent model, based on Meteosat Second Generation (MSG) data, to support them. The machine learning component of the system is therefore a semantic segmentation of the satellite data into three classes: CB (cumulonimbus), TCU (towering cumulus) or neither.

Challenges

Labelling was a significant challenge. The signs of convective clouds are extremely subtle, and even a trained human finds it difficult to recognise them in the data.

There were also a number of technical challenges regarding the input data. The satellite orbits above the equator and takes an image of the entire Earth disk, which puts it at an angle of ~20° above normal. As a result, the image must be geometrically transformed to give the correct perspective.

We also have 12 channels: 3 visual, which are all different wavelengths of red rather than RGB, and 9 infrared. One of the visual channels, the High Resolution Visual (HRV) channel, has three times the resolution of the others. This presents a choice: we can downsample the HRV channel or upsample the others. We chose to upsample the remaining channels so that the fine structure in the HRV channel is preserved.
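The upsampling choice can be illustrated with a minimal sketch. It assumes nearest-neighbour interpolation and synthetic arrays in place of real MSG data; the interpolation method actually used in the project is not stated here, and the function names `upsample_nearest` and `stack_on_hrv_grid` are hypothetical.

```python
import numpy as np


def upsample_nearest(channel: np.ndarray, factor: int = 3) -> np.ndarray:
    """Repeat every pixel `factor` times along both axes (nearest neighbour)."""
    return np.repeat(np.repeat(channel, factor, axis=0), factor, axis=1)


def stack_on_hrv_grid(hrv: np.ndarray, low_res: np.ndarray, factor: int = 3) -> np.ndarray:
    """Bring all channels onto the HRV grid and stack them.

    hrv:     (H * factor, W * factor) array
    low_res: (C, H, W) array holding the remaining channels
    returns: (C + 1, H * factor, W * factor) array, HRV first
    """
    upsampled = [upsample_nearest(ch, factor) for ch in low_res]
    return np.stack([hrv, *upsampled], axis=0)


# Synthetic stand-in data (assumption): 11 low-resolution channels plus HRV.
rng = np.random.default_rng(0)
low_res = rng.random((11, 4, 5)).astype(np.float32)
hrv = rng.random((12, 15)).astype(np.float32)

stacked = stack_on_hrv_grid(hrv, low_res)
print(stacked.shape)  # (12, 12, 15)
```

Nearest-neighbour repetition keeps the original pixel values untouched; a smoother interpolation (for example bilinear) would be an equally plausible choice and only changes the `upsample_nearest` helper.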

Our Solution

Labelling was carried out on a composite image of the HRV channel and one of the infrared channels, which made the relevant clouds stand out more. Even this was not sufficient to make a judgement in every case, so external data in the form of radar and ground observations was also consulted in order to label correctly. Additionally, a trained meteorologist reviewed all labels to ensure that they were correct.

The model itself is a U-Net implemented in PyTorch and trained using the Adam optimiser with a one-cycle learning rate schedule.
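As a rough sketch of this training setup, the snippet below pairs Adam with PyTorch's `OneCycleLR` scheduler. Everything specific is an assumption made for illustration: a tiny convolutional network stands in for the U-Net, the data is random, and the learning rates, step count and loss function are placeholder values rather than the project's settings.

```python
import torch
from torch import nn

torch.manual_seed(0)

NUM_CHANNELS = 12  # satellite channels on a common grid
NUM_CLASSES = 3    # CB, TCU, neither

# Stand-in for the U-Net (assumption): a tiny fully convolutional network
# that maps 12 input channels to per-pixel logits for the three classes.
model = nn.Sequential(
    nn.Conv2d(NUM_CHANNELS, 16, kernel_size=3, padding=1),
    nn.ReLU(),
    nn.Conv2d(16, NUM_CLASSES, kernel_size=1),
)

steps = 20  # hypothetical number of optimiser steps
optimizer = torch.optim.Adam(model.parameters(), lr=1e-4)
scheduler = torch.optim.lr_scheduler.OneCycleLR(
    optimizer, max_lr=1e-3, total_steps=steps
)
loss_fn = nn.CrossEntropyLoss()  # assumed loss for per-pixel classification

# Random stand-in batch: 4 tiles of 32x32 pixels with per-pixel class labels.
images = torch.randn(4, NUM_CHANNELS, 32, 32)
labels = torch.randint(0, NUM_CLASSES, (4, 32, 32))

learning_rates = []
for _ in range(steps):
    optimizer.zero_grad()
    loss = loss_fn(model(images), labels)
    loss.backward()
    optimizer.step()
    scheduler.step()  # one-cycle schedules are stepped once per batch
    learning_rates.append(optimizer.param_groups[0]["lr"])

# The segmentation map is the most likely class at each pixel.
with torch.no_grad():
    predicted = model(images).argmax(dim=1)
print(predicted.shape)  # torch.Size([4, 32, 32])
```

The recorded `learning_rates` rise to `max_lr` early in training and then anneal towards a much smaller value, which is the defining shape of a one-cycle schedule.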

Extensive data augmentation was also used to get the most from our dataset.
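The specific augmentations are not listed here, so the following is only an illustrative sketch of the general pattern for segmentation data: any geometric transform has to be applied identically to the image and to its label mask. The choice of random flips and 90° rotations, and the function name `augment`, are assumptions.

```python
import numpy as np


def augment(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator):
    """Apply the same random flip/rotation to an image and its label mask.

    image: (C, H, W) array of channels
    mask:  (H, W) array of class indices
    """
    if rng.random() < 0.5:  # horizontal flip
        image = image[:, :, ::-1]
        mask = mask[:, ::-1]
    if rng.random() < 0.5:  # vertical flip
        image = image[:, ::-1, :]
        mask = mask[::-1, :]
    k = int(rng.integers(0, 4))  # number of 90-degree rotations
    image = np.rot90(image, k, axes=(1, 2))
    mask = np.rot90(mask, k, axes=(0, 1))
    return np.ascontiguousarray(image), np.ascontiguousarray(mask)


# Synthetic example: channel 0 is a copy of the mask, which makes it easy
# to check that image and labels stay aligned after augmentation.
rng = np.random.default_rng(0)
mask = rng.integers(0, 3, size=(8, 8))
image = np.stack([mask.astype(np.float32), rng.random((8, 8), dtype=np.float32)])

aug_image, aug_mask = augment(image, mask, rng)
```

Because the transforms only rearrange pixels, the class labels in the mask remain valid without any re-labelling.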

Technologies used

Backend: Python, PyTorch, SatPy, OpenCV, NumPy

Infrastructure: Google Cloud (training), Git, Nevergrad, TensorBoard