Defect Detection in Manufacturing

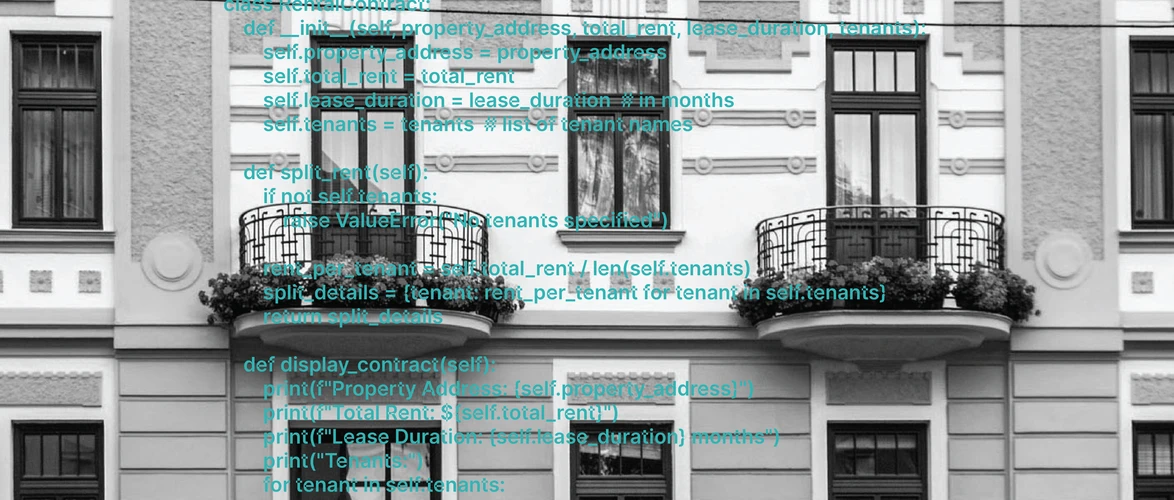

AI-supported optical defect detection for semiconductor laser production.

Input

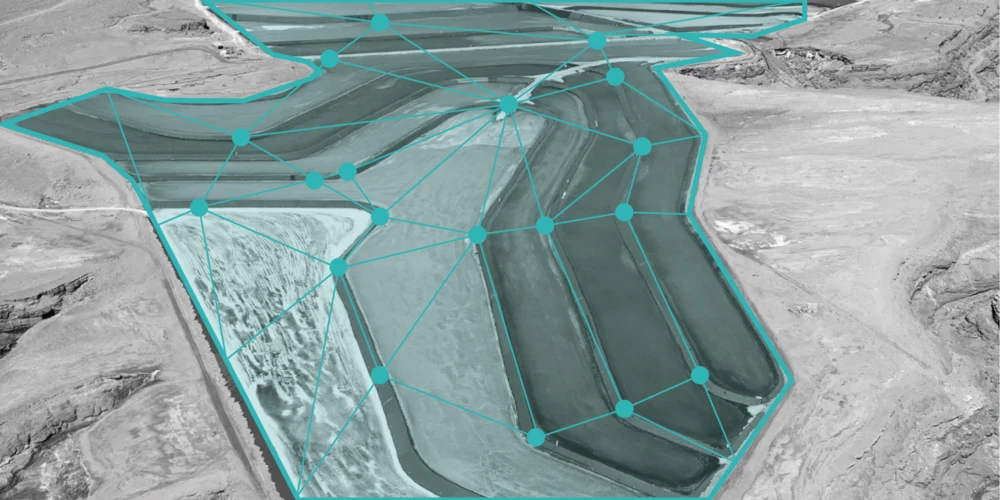

Optical images of semiconductor devices from laser production

Output

Type and location of defects
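The source specifies only that each result gives a defect's type and location. Below is a minimal sketch of such a result record, assuming location is reported as a pixel bounding box. The class `Defect`, its fields, the helper `count_by_type`, and the example labels ("scratch", "particle") are all hypothetical and are not taken from the project.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class Defect:
    """One detected defect (hypothetical schema, not from the source)."""
    defect_type: str  # defect class label, e.g. "scratch" (illustrative only)
    x: int            # left edge of the bounding box, in pixels (assumed)
    y: int            # top edge of the bounding box, in pixels (assumed)
    width: int        # bounding-box width, in pixels
    height: int       # bounding-box height, in pixels

    def center(self) -> tuple[float, float]:
        """Return the bounding-box center as (x, y) in pixel coordinates."""
        return (self.x + self.width / 2, self.y + self.height / 2)


def count_by_type(defects: list[Defect]) -> dict[str, int]:
    """Tally detections per defect type, e.g. for an inspection summary."""
    return dict(Counter(d.defect_type for d in defects))


if __name__ == "__main__":
    # Illustrative detections for one image; the values are made up.
    found = [
        Defect("scratch", 10, 20, 4, 30),
        Defect("particle", 100, 50, 6, 6),
        Defect("scratch", 200, 80, 3, 25),
    ]
    print(count_by_type(found))  # {'scratch': 2, 'particle': 1}
    print(found[1].center())     # (103.0, 53.0)
```

Keeping type and location in one record lets downstream steps, such as per-type statistics or marking defects on the image, work from the same output.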

Goal

Increase the efficiency of defect inspection