The best (Python) tools for remote sensing

Emilius Richter

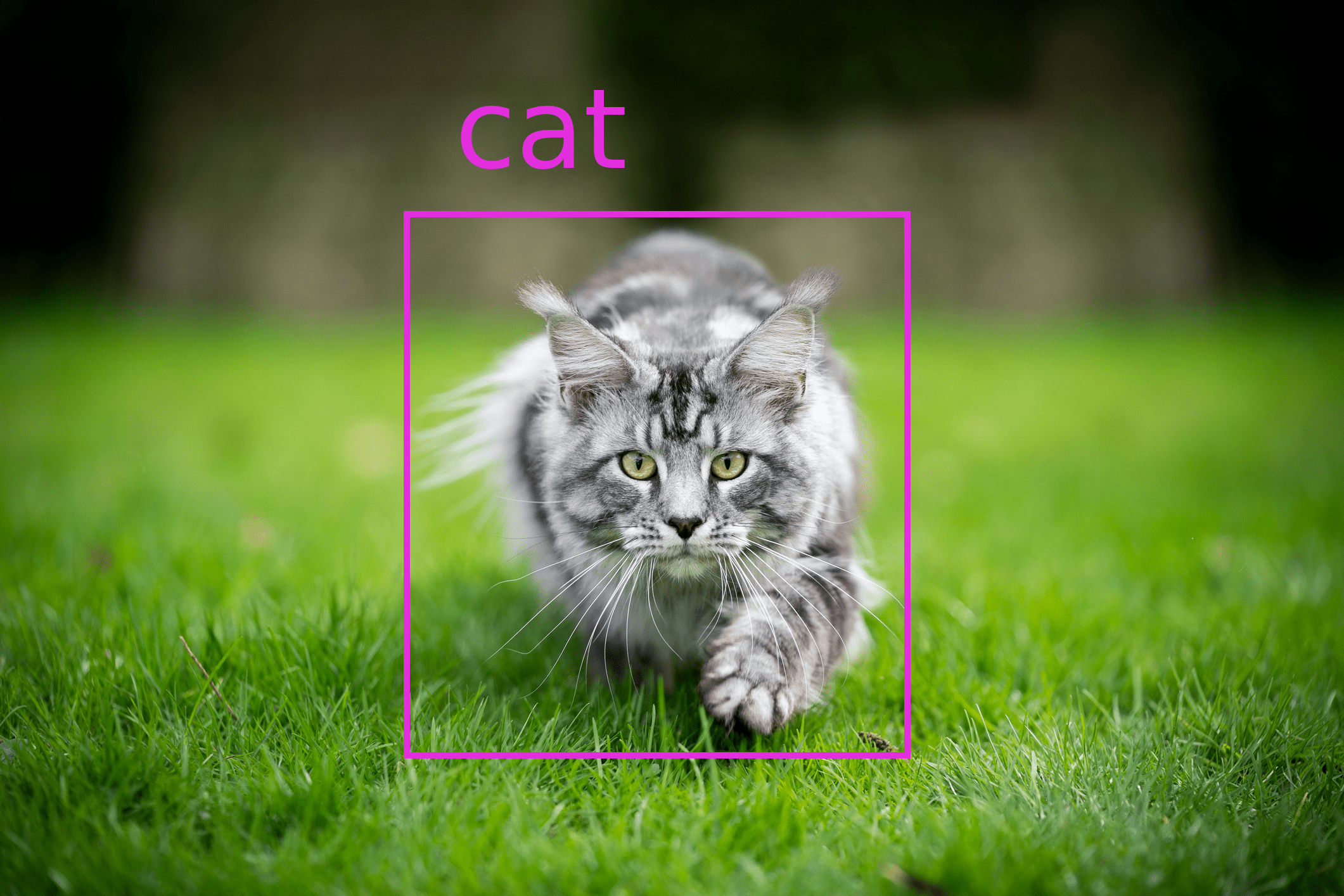

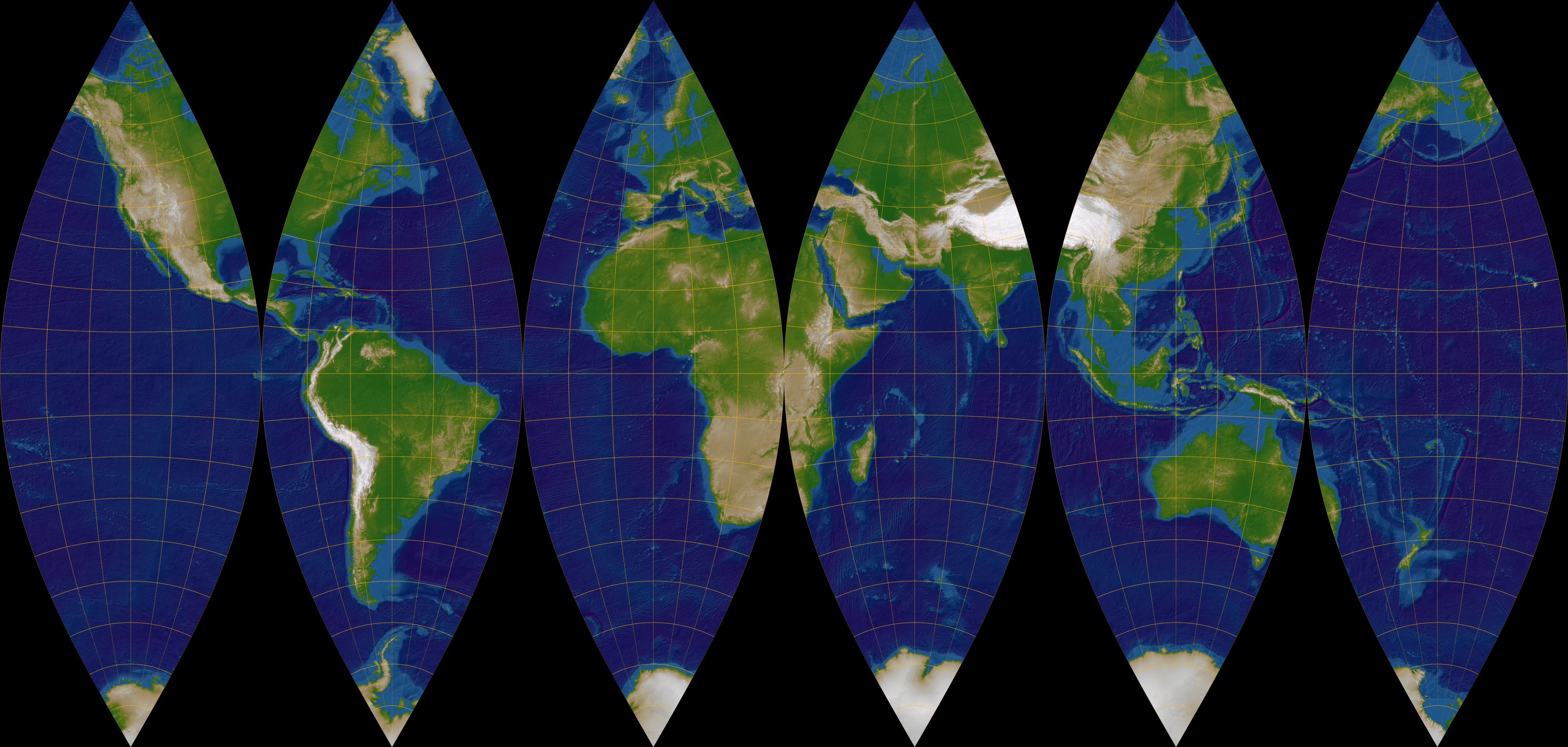

An estimated 906 Earth observation satellites are currently in orbit, providing science and industry with many terabytes of data every day. The satellites carry radar and optical sensors and cover different spectral ranges with varying spectral, spatial, and temporal resolutions. This breadth of geospatial data opens up new applications for remote sensing methods in many industrial and governmental institutions. On our website, you can find some projects in which we have successfully used satellite data, as well as possible use cases of remote sensing methods for various industries. Well-known satellite systems and programs include Sentinel-1 (radar) and Sentinel-2 (optical) from ESA, Landsat (optical) from NASA, TerraSAR-X and TanDEM-X (both radar) from DLR, and PlanetScope (optical) from Planet.

There are two basic types of geospatial data: raster data and vector data.

Raster data

Raster data are a grid of regularly spaced pixels, represented as a matrix, in which each pixel is associated with a geographic location. The pixel values depend on the type of information stored, e.g., brightness values for digital images or temperature values for thermal images. The size of the pixels determines the spatial resolution of the raster. Geospatial raster data are therefore used to represent satellite imagery. Raster images usually contain several bands or channels, e.g., a red, a green, and a blue channel. Satellite data often contain infrared and/or ultraviolet bands as well.

Vector data

Vector data represent geographic features on the earth's surface, such as cities, country borders, roads, bodies of water, property boundaries, etc. Such features are represented by one or more connected vertices, where a vertex defines a position in space by x-, y-, and z-values. A single vertex is a point, multiple connected vertices form a line, and three or more vertices connected into a closed ring form a polygon.
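
To make the two data models concrete, here is a minimal sketch in plain Python, without any geospatial library. All names and numbers are made up for illustration: the `raster` dictionary, its origin and pixel size, the helper functions `pixel_center` and `is_closed`, and the example coordinates (assumed to be longitude/latitude pairs) are assumptions, not part of any tool presented in this article.

```python
# Raster: one matrix of pixel values per band, plus the information needed
# to place each pixel on the ground. Origin and pixel size are assumed values.
raster = {
    "origin_x": 500000.0,   # x-coordinate of the upper-left corner (metres, assumed)
    "origin_y": 5800000.0,  # y-coordinate of the upper-left corner (metres, assumed)
    "pixel_size": 10.0,     # spatial resolution: one pixel covers 10 m x 10 m
    "bands": {
        "red":   [[120, 130, 125], [118, 122, 127]],
        "green": [[90, 95, 92], [88, 91, 94]],
        "blue":  [[60, 66, 63], [59, 61, 65]],
    },
}


def pixel_center(raster, row, col):
    """Return the (x, y) map coordinates of the centre of pixel (row, col)."""
    x = raster["origin_x"] + (col + 0.5) * raster["pixel_size"]
    # Row indices grow downwards, while y-coordinates grow upwards.
    y = raster["origin_y"] - (row + 0.5) * raster["pixel_size"]
    return x, y


# Vector: features described by vertices (here 2D, assumed lon/lat pairs).
point = (13.405, 52.52)                                   # a single vertex
line = [(13.40, 52.51), (13.41, 52.52), (13.42, 52.52)]   # connected vertices
polygon = [                                               # closed ring of vertices
    (13.40, 52.51), (13.42, 52.51), (13.42, 52.53), (13.40, 52.53),
    (13.40, 52.51),  # the first vertex is repeated to close the ring
]


def is_closed(ring):
    """A ring is closed if it ends where it starts and has >= 3 distinct corners."""
    return len(ring) >= 4 and ring[0] == ring[-1]


print(pixel_center(raster, 0, 0))
print(is_closed(polygon), is_closed(line))
```

In practice, libraries such as Rasterio and Shapely take care of this bookkeeping; the sketch only shows what kind of information a raster and a vector geometry carry.
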
The x-, y-, and z-values always refer to the coordinate reference system (CRS) of the data, which is stored in vector files as metadata. The most common file formats for vector data are GeoJSON, KML, and Shapefile.

Processing and analyzing these data requires a variety of tools. In the following, I present the tools that we at dida have had the best experience with and that we use regularly in our remote sensing projects. I present the tools one by one, grouped into the following sections:

- Requesting satellite data: EOBrowser, Sentinelsat, Sentinelhub
- Processing raster data: Rasterio, Pyproj, SNAP, pyroSAR, Rioxarray
- Processing vector data: Shapely, Python-geojson, Geojson.io, Geopandas, Fiona
- Providing geospatial data: QGIS, GeoServer, Leafmap
- Processing meteorological satellite data: Wetterdienst, Wradlib