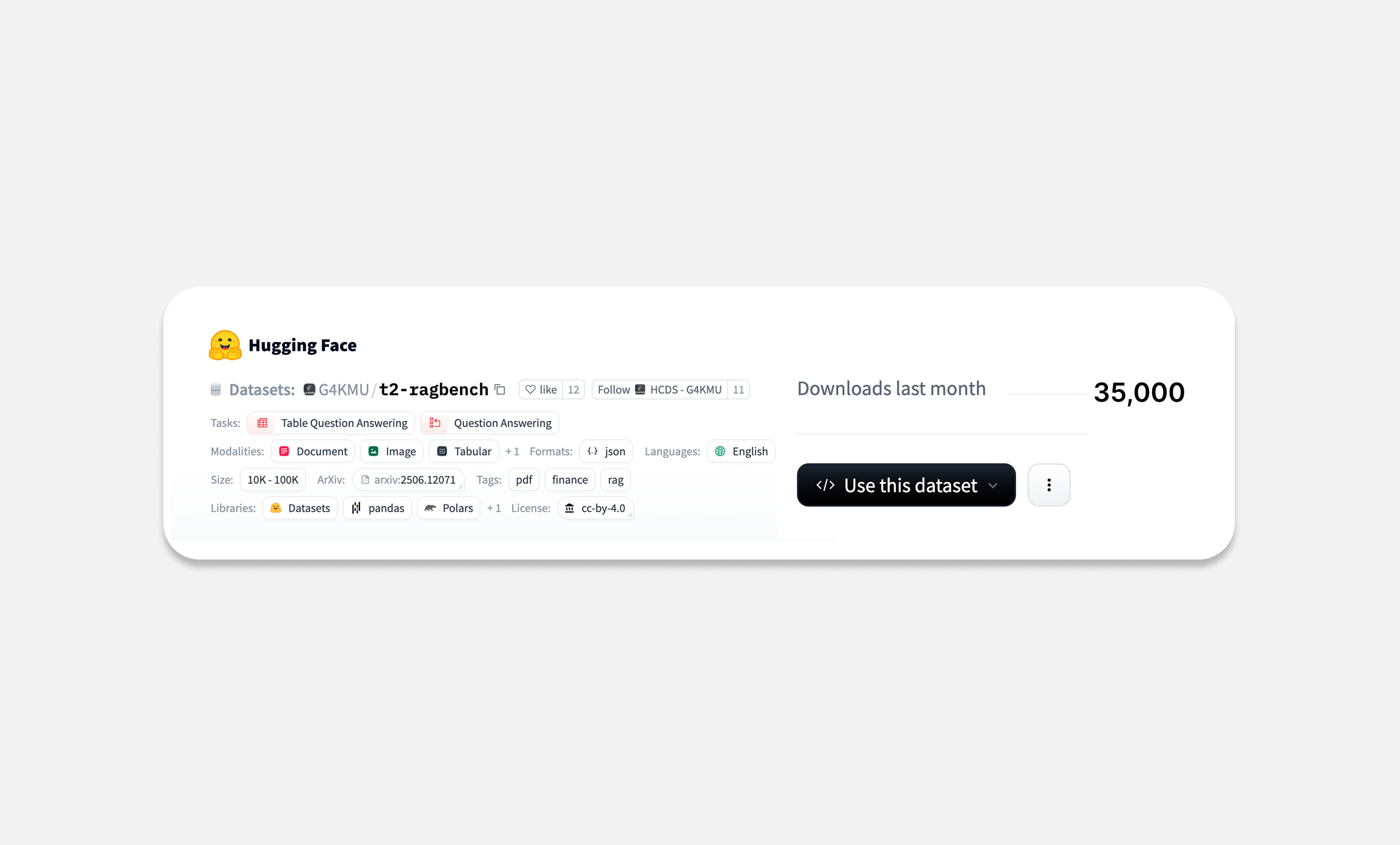

35,000+ monthly downloads for our RAG evaluation benchmark dataset T²-RAGBench

Our new benchmark dataset for retrieval-augmented generation (RAG) systems, T²-RAGBench, has seen a significant surge in adoption within the machine learning community and currently records more than 35,000 downloads per month. Developed in collaboration with the University of Hamburg’s Hub of Computing and Data Science (HCDS), the dataset addresses a critical gap in the evaluation of AI systems that analyze complex financial data.

Solving the problem of retrieval ambiguity

Standard question-answering (QA) datasets often operate in oracle settings, where the model is given the correct context up front. In these settings, a vague query such as "What was the net cash flow?" still works because only one document is in scope. In production-grade RAG systems that manage thousands of documents, however, the same ambiguity produces retrieval noise: many reports state a net cash flow figure, so several documents look equally relevant and developers cannot verify whether the system retrieved the right one.

T²-RAGBench introduces context-independent questions that specify entities, dates, and metrics, ensuring each query points to exactly one ground-truth document.
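To make the ambiguity concrete, here is a toy sketch in Python: a minimal Okapi BM25 scorer written from scratch (Lucene-style IDF, k1 = 1.5, b = 0.75), run over three hypothetical report snippets that all state a net cash flow. It is an illustration under those assumptions, not the benchmark's own retrieval code; the company names and figures are invented.

```python
import math
import re
from collections import Counter


def tokenize(text: str) -> list[str]:
    # Lowercase and keep alphanumeric runs; "Corp's" -> ["corp", "s"].
    return re.findall(r"[a-z0-9]+", text.lower())


class BM25:
    """Minimal Okapi BM25 scorer (Lucene-style IDF), for illustration only."""

    def __init__(self, docs: list[str], k1: float = 1.5, b: float = 0.75):
        self.k1, self.b = k1, b
        self.docs = [tokenize(d) for d in docs]
        self.tfs = [Counter(toks) for toks in self.docs]
        self.avgdl = sum(len(t) for t in self.docs) / len(self.docs)
        n_docs = len(self.docs)
        # Document frequency: in how many documents each term occurs.
        df = Counter(term for tf in self.tfs for term in tf)
        self.idf = {
            term: math.log((n_docs - n + 0.5) / (n + 0.5) + 1.0)
            for term, n in df.items()
        }

    def score(self, query: str) -> list[float]:
        """Return one BM25 score per document for the given query."""
        q_terms = tokenize(query)
        scores = []
        for toks, tf in zip(self.docs, self.tfs):
            # Length normalization: longer-than-average docs are penalized.
            norm = self.k1 * (1 - self.b + self.b * len(toks) / self.avgdl)
            s = 0.0
            for term in q_terms:
                f = tf.get(term, 0)
                if f:  # terms absent from the doc contribute nothing
                    s += self.idf[term] * f * (self.k1 + 1) / (f + norm)
            scores.append(s)
        return scores


# Hypothetical mini-corpus: every report states a net cash flow figure.
corpus = [
    "Acme Corp annual report 2021. Net cash flow was 12 million.",
    "Globex Inc annual report 2021. Net cash flow was 8 million.",
    "Acme Corp annual report 2020. Net cash flow was 5 million.",
]
bm25 = BM25(corpus)

ambiguous = bm25.score("What was the net cash flow?")
specific = bm25.score("What was Acme Corp's net cash flow in 2021?")

print(ambiguous)  # all three scores are identical: no unique ground truth
print(specific.index(max(specific)))  # 0: only the Acme 2021 report matches every entity
```

With the vague query, every snippet receives exactly the same score, so there is no single correct answer to check retrieval against. Naming the company and year gives one document a clear lead, which is the property the reformulated questions are meant to guarantee.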

Dataset characteristics and methodology

The dataset, developed as part of the GENIAL4KMU project, has several properties designed for rigorous testing of modern AI systems. At its foundation is a corpus of 23,088 QA pairs grounded in more than 7,300 real-world financial reports, which provides the scale needed for statistically meaningful benchmarking.

To address common retrieval failures, the team systematically reformulated ambiguous user queries into self-contained, precise questions. As a result, retrieval can be verified against a single, specific ground-truth document rather than a cluster of superficially relevant results.
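Because each question has exactly one ground-truth document, standard retrieval metrics become simple to compute: Recall@k reduces to whether the gold document appears in the top k, and reciprocal rank is 1 divided by its position. The sketch below assumes a hypothetical input format of (ranked document IDs, gold document ID) pairs; Recall@k and MRR@k are our choice for illustration, not necessarily the leaderboard's exact metrics.

```python
def reciprocal_rank(ranked_ids: list[str], gold_id: str, k: int) -> float:
    """1 / rank of the single gold document within the top k, else 0."""
    top_k = ranked_ids[:k]
    return 1.0 / (top_k.index(gold_id) + 1) if gold_id in top_k else 0.0


def evaluate(runs: list[tuple[list[str], str]], k: int = 3) -> dict[str, float]:
    """Recall@k and MRR@k when every query has exactly one gold document."""
    hits = [1.0 if gold in ranked[:k] else 0.0 for ranked, gold in runs]
    rrs = [reciprocal_rank(ranked, gold, k) for ranked, gold in runs]
    return {f"recall@{k}": sum(hits) / len(runs), f"mrr@{k}": sum(rrs) / len(runs)}


# Hypothetical retriever output: (ranked document IDs, gold document ID) per query.
runs = [
    (["doc_17", "doc_02", "doc_55"], "doc_17"),  # gold at rank 1
    (["doc_08", "doc_31", "doc_40"], "doc_31"),  # gold at rank 2
    (["doc_11", "doc_12", "doc_13"], "doc_99"),  # gold not retrieved
    (["doc_05", "doc_06", "doc_07"], "doc_07"),  # gold at rank 3
]
print(evaluate(runs, k=3))  # recall@3 = 0.75, mrr@3 ≈ 0.458
```

With ambiguous questions, the same computation would need a set of "acceptable" documents per query, and deciding what belongs in that set is exactly the judgment call the reformulation removes.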

The benchmark also adds mixed-format complexity by requiring models to perform multi-step numerical reasoning. Unlike datasets that focus solely on prose, T²-RAGBench challenges systems to combine information from unstructured narrative text and complex Markdown tables. Early baseline results from the research team underline the difficulty of this task and identify Hybrid BM25, a combination of keyword (BM25) and semantic search, as the most effective retrieval strategy for this financial data format.
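One common way to build a hybrid retriever is to normalize the keyword and semantic scores to a shared range and take a weighted sum. The sketch below assumes that approach (min-max normalization, a mixing weight `alpha`, cosine similarity over embeddings); the fusion used in the T²-RAGBench baselines may differ, and the BM25 scores and 3-dimensional embeddings are hypothetical stand-ins for real retriever outputs.

```python
import math


def min_max(scores: list[float]) -> list[float]:
    """Rescale scores to [0, 1] so lexical and dense scales are comparable."""
    lo, hi = min(scores), max(scores)
    if hi == lo:
        return [0.0 for _ in scores]
    return [(s - lo) / (hi - lo) for s in scores]


def cosine(u: list[float], v: list[float]) -> float:
    """Cosine similarity between two embedding vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def hybrid_rank(
    bm25_scores: list[float],
    query_vec: list[float],
    doc_vecs: list[list[float]],
    alpha: float = 0.5,
) -> tuple[list[int], list[float]]:
    """Rank documents by alpha * lexical + (1 - alpha) * semantic score."""
    dense_scores = [cosine(query_vec, d) for d in doc_vecs]
    lex, sem = min_max(bm25_scores), min_max(dense_scores)
    fused = [alpha * l + (1 - alpha) * s for l, s in zip(lex, sem)]
    ranking = sorted(range(len(fused)), key=lambda i: fused[i], reverse=True)
    return ranking, fused


# Hypothetical inputs for one query over four documents.
bm25_scores = [2.0, 2.4, 0.5, 0.3]  # keyword retriever alone favors doc 1
query_vec = [1.0, 0.0, 0.0]  # toy 3-d embeddings
doc_vecs = [[0.9, 0.3, 0.0], [0.2, 1.0, 0.0], [1.0, 0.1, 0.0], [0.0, 0.0, 1.0]]

ranking, fused = hybrid_rank(bm25_scores, query_vec, doc_vecs, alpha=0.5)
print(ranking)  # [0, 1, 2, 3]: doc 0 wins, though neither retriever alone ranks it first
```

Setting `alpha=1.0` recovers pure keyword ranking and `alpha=0.0` pure semantic ranking. Reciprocal rank fusion, which combines ranks instead of raw scores, is a common alternative when score distributions are hard to normalize.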

Collaboration

The project is a successful bridge between industry and academia. Key contributors to the initiative include Jan Strich, Enes Kutay İşgörür, Dr. Maximilian Trescher, Dr. Chris Biemann, and Dr. Martin Semmann. The research team has made the resources publicly available to foster further innovation in the field of LLMs and information retrieval.

Technical Resources:

- Dataset Access: Available via Hugging Face

- Performance Tracking: An interactive leaderboard is available for researchers to compare model results on the benchmark.