Underdamped Diffusion Bridges with Applications to Sampling

by Denis Blessing, Julius Berner, Lorenz Richter, Gerhard Neumann

Year:

2025

Publication:

International Conference on Learning Representations (ICLR)

Abstract:

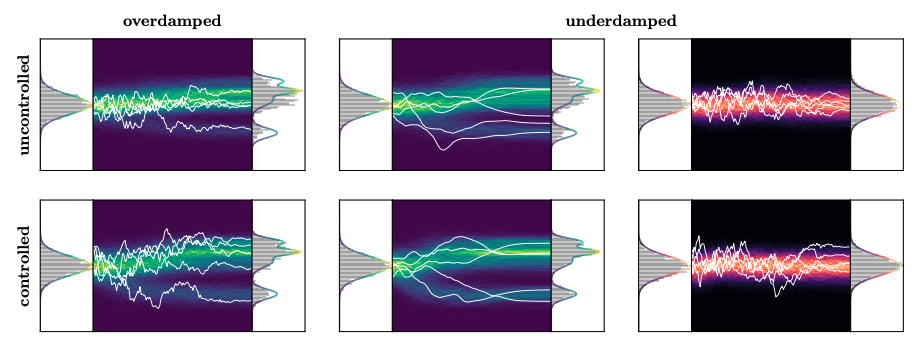

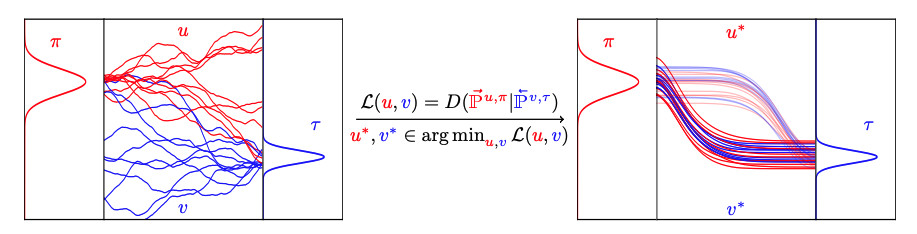

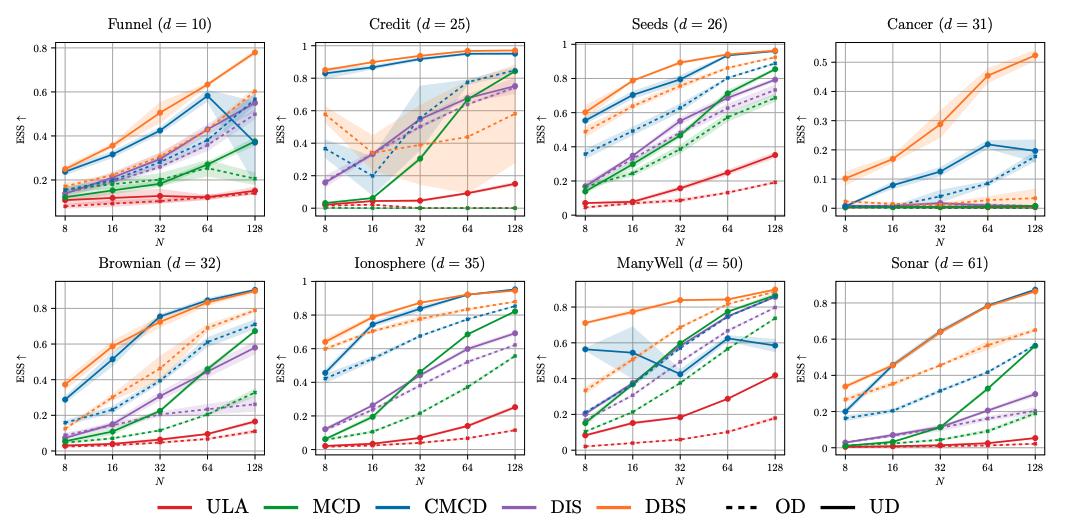

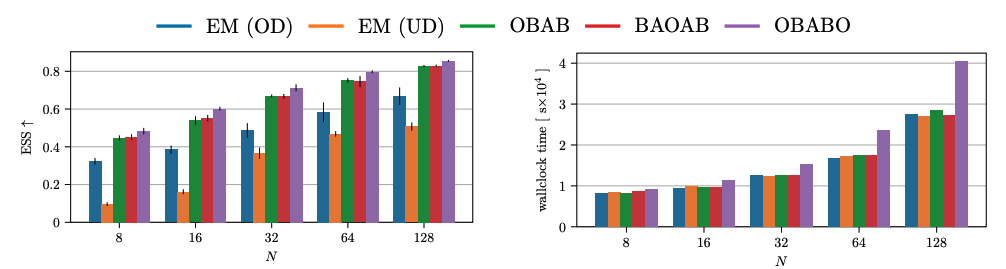

We provide a general framework for learning diffusion bridges that transport prior to target distributions. It includes existing diffusion models for generative modeling, but also underdamped versions with degenerate diffusion matrices, where the noise only acts in certain dimensions. Extending previous findings, our framework allows us to rigorously show that score matching in the underdamped case is indeed equivalent to maximizing a lower bound on the likelihood. Motivated by superior convergence properties and compatibility with sophisticated numerical integration schemes of underdamped stochastic processes, we propose underdamped diffusion bridges, where a general density evolution is learned rather than prescribed by a fixed noising process. We apply our method to the challenging task of sampling from unnormalized densities without access to samples from the target distribution. Across a diverse range of sampling problems, our approach demonstrates state-of-the-art performance, notably outperforming alternative methods, while requiring significantly fewer discretization steps and no hyperparameter tuning.
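To illustrate what "degenerate diffusion matrices, where the noise only acts in certain dimensions" means in practice, the following is a minimal sketch of classical underdamped Langevin dynamics in one dimension. It is not the learned bridge proposed in the paper: the drift here is fixed rather than learned, and the quadratic potential, the function names, the step size, and the friction value are all assumptions chosen for illustration. The point is only that the position coordinate receives no noise, while the velocity coordinate carries all of it.

```python
import math
import random


def grad_potential(x):
    """Gradient of the assumed potential U(x) = x**2 / 2 (standard Gaussian target)."""
    return x


def underdamped_langevin(n_steps=1000, dt=0.01, friction=1.0, x0=0.0, v0=0.0, seed=0):
    """Euler-Maruyama discretization of the underdamped Langevin SDE

        dx = v dt
        dv = -(grad U(x) + friction * v) dt + sqrt(2 * friction) dW

    Returns the list of (x, v) states, including the initial state.
    """
    rng = random.Random(seed)
    x, v = x0, v0
    path = [(x, v)]
    noise_scale = math.sqrt(2.0 * friction * dt)
    for _ in range(n_steps):
        # Position update is deterministic: no noise enters this coordinate.
        x_new = x + v * dt
        # Velocity update carries all of the randomness.
        v_new = v - (grad_potential(x) + friction * v) * dt + noise_scale * rng.gauss(0.0, 1.0)
        x, v = x_new, v_new
        path.append((x, v))
    return path
```

Calling `underdamped_langevin(n_steps=100)` returns 101 states. Each position increment equals the previous velocity times `dt`, which is the discrete counterpart of a diffusion matrix that is zero in the position block.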

Link:

Read the paper

Additional Information

Brief introduction of the dida co-author(s) and relevance for dida's ML developments.

Lorenz Richter (PhD)

Originally focused on stochastics and numerics (FU Berlin), the mathematician has been working on deep learning algorithms for some time. Besides his interest in the theory, he has solved multiple practical data science problems over the last 10 years. Lorenz leads the machine learning team.