From Discrete-Time Policies to Continuous-Time Diffusion Samplers: Asymptotic Equivalences and Faster Training

by Julius Berner, Lorenz Richter, Marcin Sendera, Jarrid Rector-Brooks, Nikolay Malkin

Year:

2025

Publication:

arXiv preprint arXiv:2501.06148

Abstract:

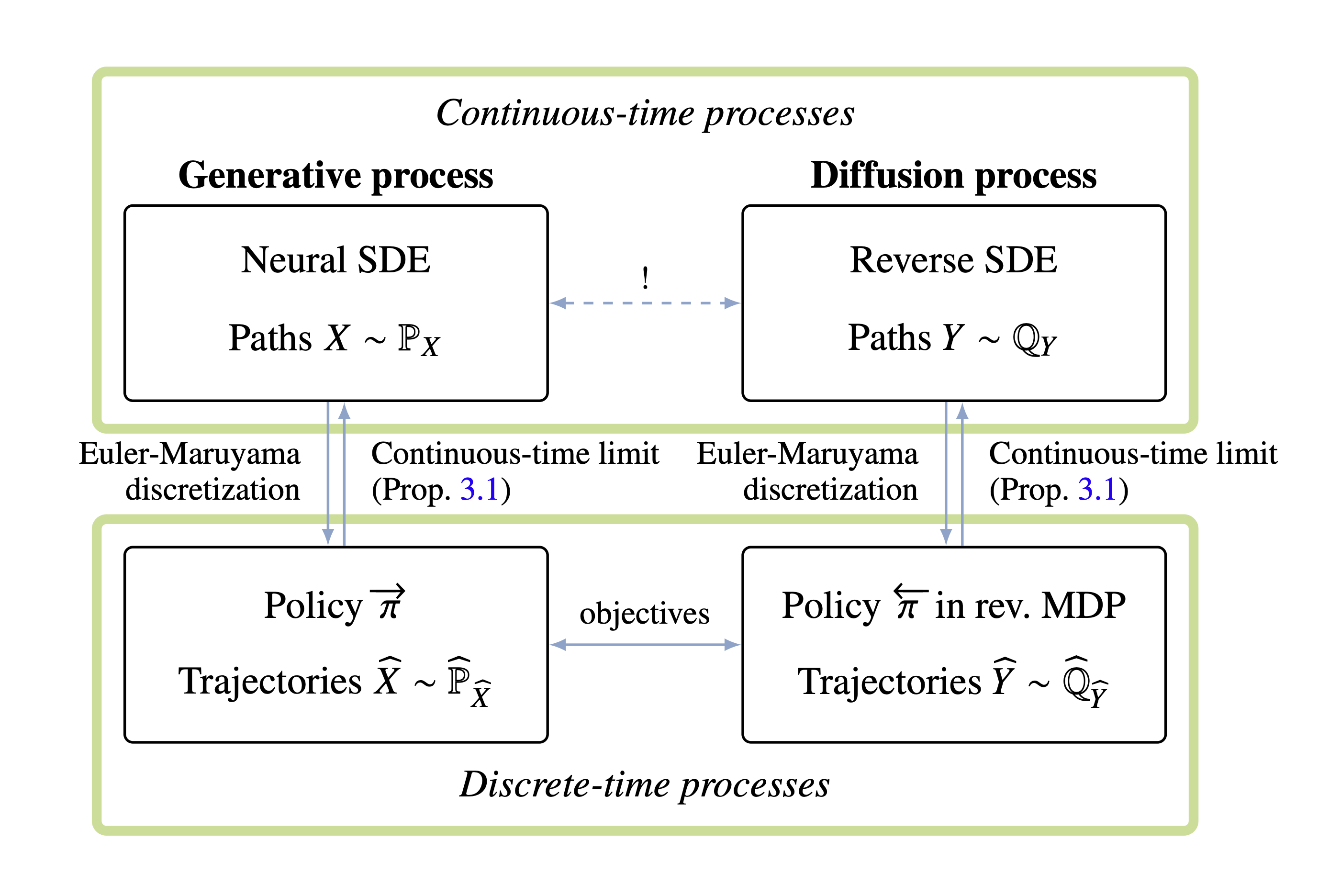

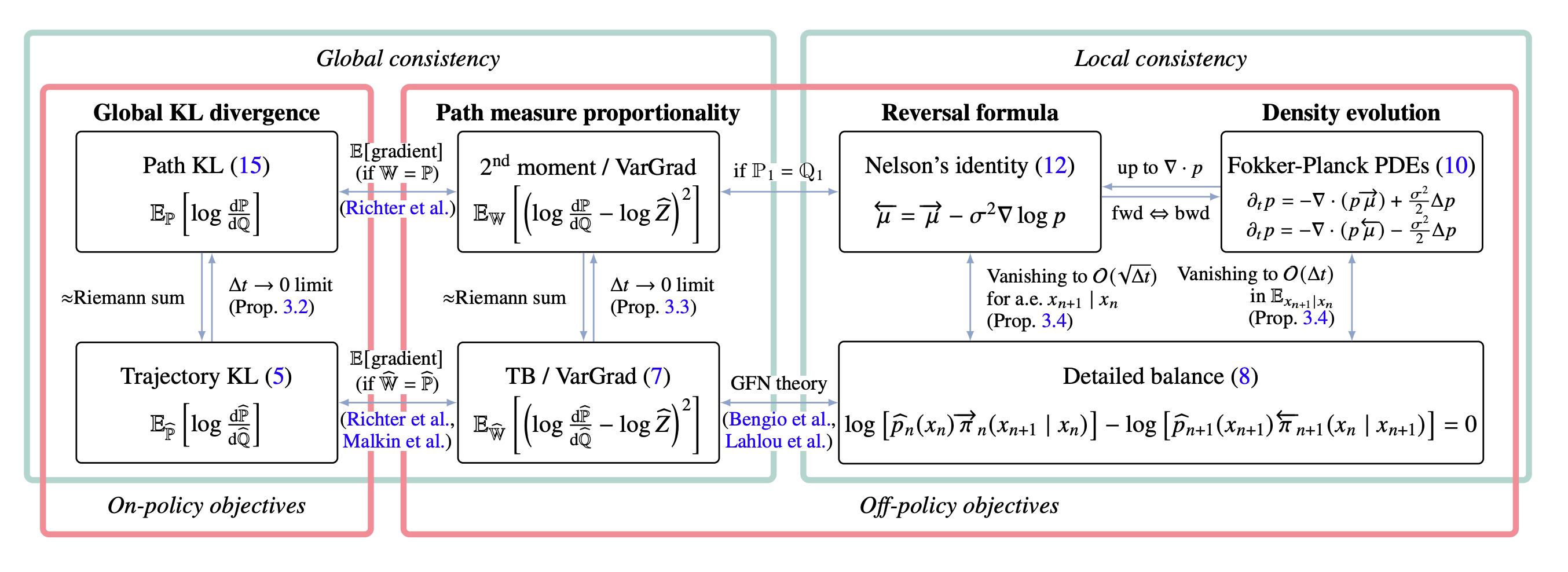

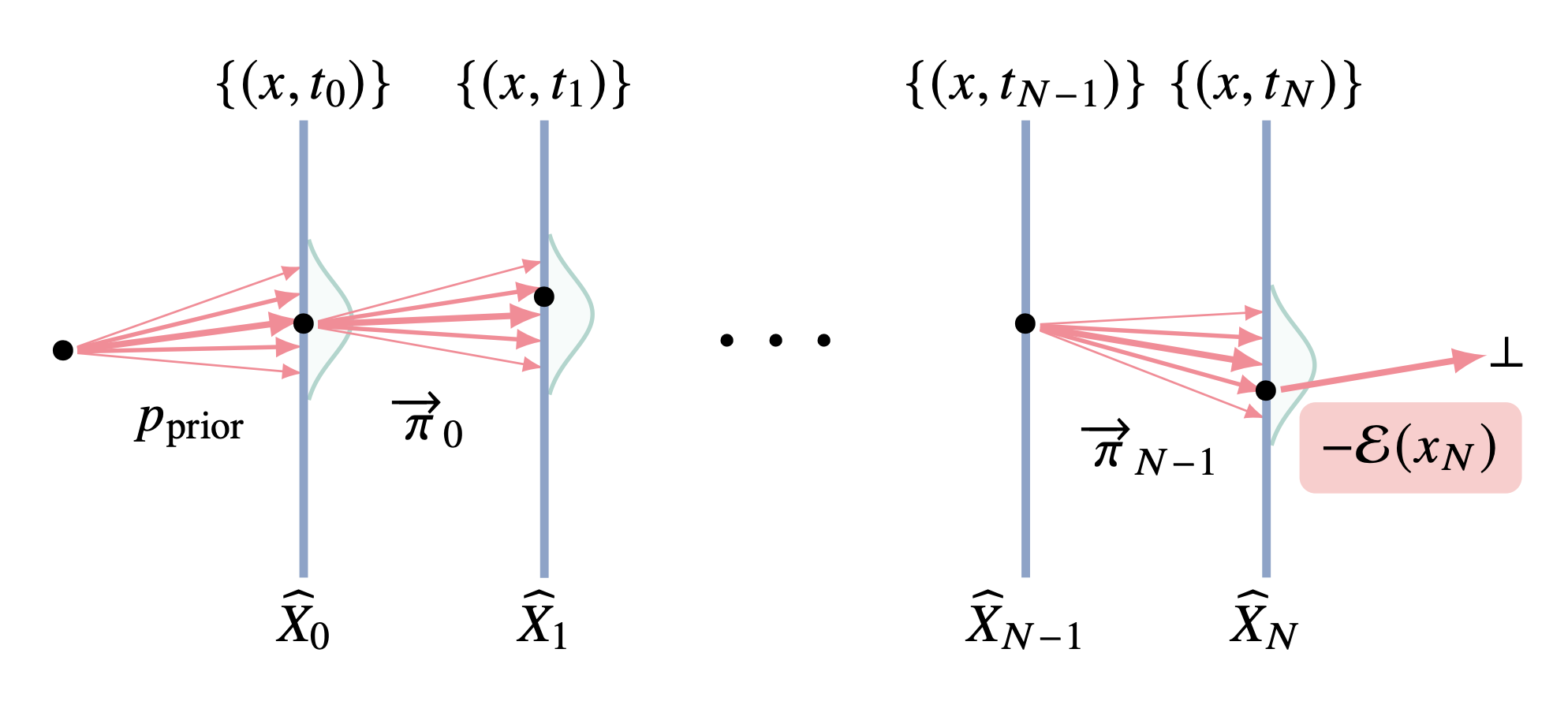

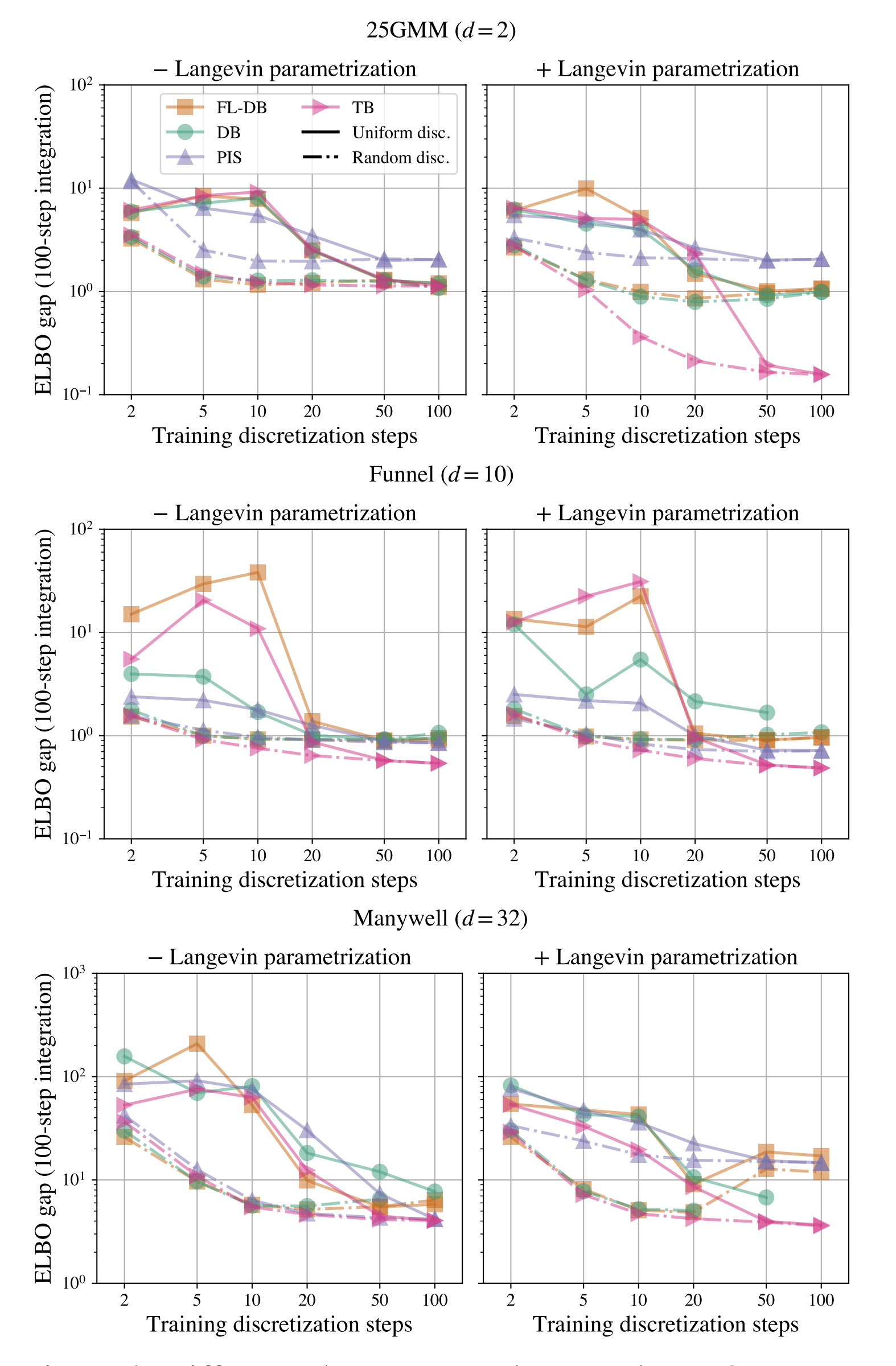

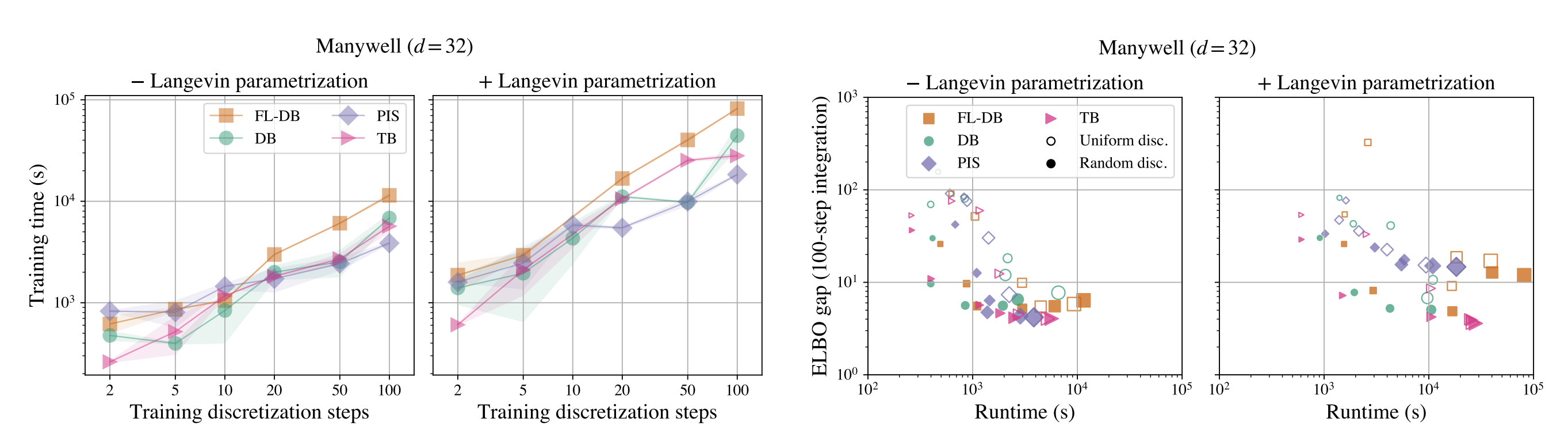

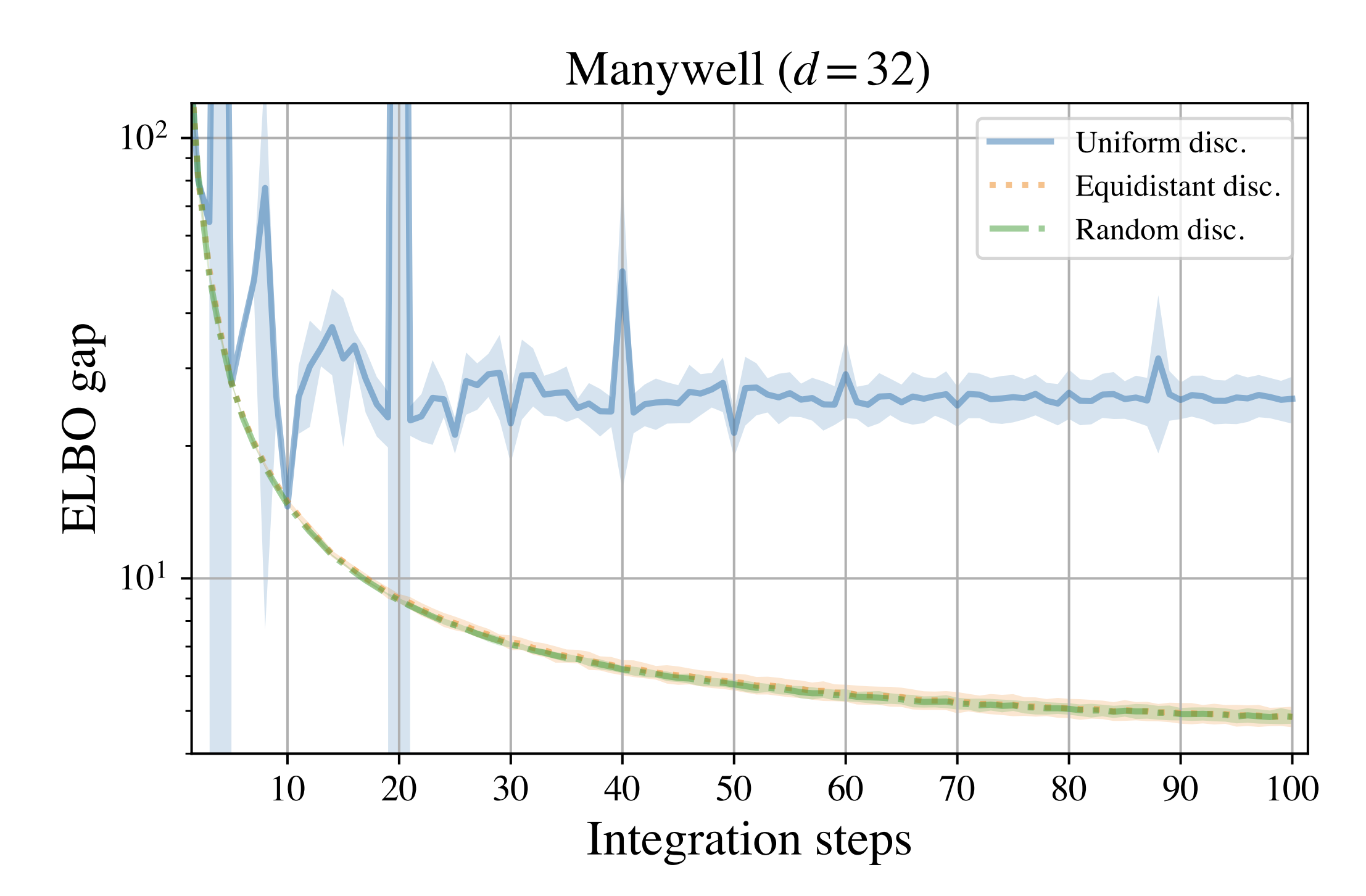

We study the problem of training neural stochastic differential equations, or diffusion models, to sample from a Boltzmann distribution without access to target samples. Existing methods for training such models enforce time-reversal of the generative and noising processes, using either differentiable simulation or off-policy reinforcement learning (RL). We prove equivalences between families of objectives in the limit of infinitesimal discretization steps, linking entropic RL methods (GFlowNets) with continuous-time objects (partial differential equations and path space measures). We further show that an appropriate choice of coarse time discretization during training allows greatly improved sample efficiency and the use of time-local objectives, achieving competitive performance on standard sampling benchmarks with reduced computational cost.
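The abstract refers to GFlowNet-style objectives for training a time-discretized diffusion sampler. As a rough illustration, here is a minimal sketch of a trajectory balance (TB) loss for a one-dimensional sampler. The simplifying assumptions are ours, not the paper's exact setup. The generative process starts at x = 0 and is simulated with Euler-Maruyama steps. The noising process is the Brownian bridge pinned at 0. `drift`, `log_reward` and `log_z` are hypothetical stand-ins for the learned drift network, the unnormalized Boltzmann log-density and the learned log-partition estimate. This is not the authors' implementation.

```python
import math
import random


def gaussian_log_pdf(x, mean, var):
    """Log-density of N(mean, var) at x."""
    return -0.5 * (math.log(2 * math.pi * var) + (x - mean) ** 2 / var)


def sample_trajectory(drift, n_steps, T=1.0, sigma=1.0, rng=random):
    """Euler-Maruyama simulation of dX = drift(X, t) dt + sigma dW from X_0 = 0.

    Returns the visited states and the log-likelihood of the path under the
    generative (forward) transition kernels.
    """
    dt = T / n_steps
    x = 0.0
    xs = [x]
    log_pf = 0.0
    for n in range(n_steps):
        t = n * dt
        mean = x + drift(x, t) * dt
        var = sigma ** 2 * dt
        x_next = mean + math.sqrt(var) * rng.gauss(0.0, 1.0)
        log_pf += gaussian_log_pdf(x_next, mean, var)
        x = x_next
        xs.append(x)
    return xs, log_pf


def backward_log_prob(xs, T=1.0, sigma=1.0):
    """Log-likelihood of the path under the noising process, assumed here to be
    the Brownian bridge pinned at X_0 = 0.

    The kernel from x_1 back to x_0 is a point mass at 0, so it is skipped.
    """
    n_steps = len(xs) - 1
    dt = T / n_steps
    log_pb = 0.0
    for n in range(1, n_steps):
        ratio = n / (n + 1)  # t_n / t_{n+1} on the uniform grid
        mean = ratio * xs[n + 1]
        var = sigma ** 2 * dt * ratio
        log_pb += gaussian_log_pdf(xs[n], mean, var)
    return log_pb


def trajectory_balance_loss(log_z, drift, log_reward, n_steps,
                            T=1.0, sigma=1.0, rng=random):
    """Squared TB residual for one sampled trajectory:
    (log Z + log p_F(path) - log R(x_N) - log p_B(path | x_N))^2.
    """
    xs, log_pf = sample_trajectory(drift, n_steps, T, sigma, rng)
    log_pb = backward_log_prob(xs, T, sigma)
    residual = log_z + log_pf - log_reward(xs[-1]) - log_pb
    return residual ** 2
```

As a sanity check, take zero drift and the target N(0, sigma^2 T). The two processes are then exact time reversals of each other, so the loss vanishes for every sampled path when `log_z` equals 0.5 * log(2 * pi * sigma^2 * T). In real training, the drift would be a neural network and `log_z` a learnable scalar, both fitted by automatic differentiation. `n_steps` is the training-time discretization, the quantity the abstract proposes choosing coarsely to improve sample efficiency.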

Link:

Read the paper

Additional Information

Brief introduction of the dida co-author(s) and their relevance for dida's ML developments.

Lorenz Richter (PhD)

With an original focus on stochastics and numerics (FU Berlin), the mathematician has been working on deep learning algorithms for some time. Besides his interest in the theory, he has solved multiple practical data science problems over the last 10 years. Lorenz leads the machine learning team.