Recap of ICML 2025

Let’s recap this year’s International Conference on Machine Learning (ICML), which took place in Vancouver, Canada.

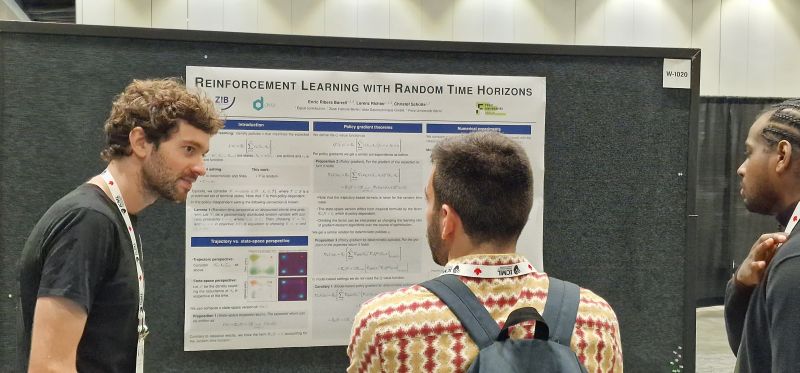

We are happy to have been part of ICML once again, and this time we are proud to have presented our first paper on reinforcement learning (RL): “Reinforcement Learning with Random Time Horizons”, written in collaboration with Enric Ribera Borrell and Christof Schütte.

In this work, we extend RL to settings with random, policy-dependent time horizons by rigorously deriving new policy gradient formulas, both stochastic and deterministic, and show that these improve optimization convergence.
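
To give a feel for what a random, policy-dependent time horizon means, here is a minimal toy sketch. It is not taken from the paper and does not implement its policy gradient formulas. The environment (a 1D walker that stops when it first hits either end of a line) and the names `episode_length`, `mean_horizon`, and `p_right` are assumptions made purely for illustration.

```python
import random

def episode_length(p_right, start=5, goal=10, max_steps=10_000, rng=random):
    """Number of steps until a 1D walker first hits position 0 or `goal`.

    `p_right` plays the role of the policy: the probability of stepping right.
    The episode has no fixed length; it ends at a random stopping time.
    """
    state, steps = start, 0
    while 0 < state < goal and steps < max_steps:
        state += 1 if rng.random() < p_right else -1
        steps += 1
    return steps

def mean_horizon(p_right, episodes=2000, seed=0):
    """Average episode length under a given policy (Monte Carlo estimate)."""
    rng = random.Random(seed)
    total = sum(episode_length(p_right, rng=rng) for _ in range(episodes))
    return total / episodes

if __name__ == "__main__":
    # Same environment, two policies: the time horizon changes with the policy.
    for p in (0.5, 0.9):
        print(f"p_right={p}: mean episode length = {mean_horizon(p):.2f}")
```

In this toy setting, changing the policy changes how long episodes last, so the horizon cannot be treated as a fixed constant when optimizing the policy.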

Apart from our presentation, we mixed and mingled with other international researchers. The dominant topics at the conference this year can be grouped as follows:

(1) LLMs & Agents, (2) Diffusion Models, (3) Interpretability & Alignment, (4) Optimization & Scaling Laws, and (5) Fairness

Once again, the atmosphere was truly great, which is another reason why we like to share our research on an international stage.

Link to our paper: https://arxiv.org/pdf/2506.00962