Reinforcement Learning with Random Time Horizons

by Enric Ribera Borrell, Lorenz Richter, Christof Schütte

Year:

2025

Publication:

International Conference on Machine Learning (ICML)

Abstract:

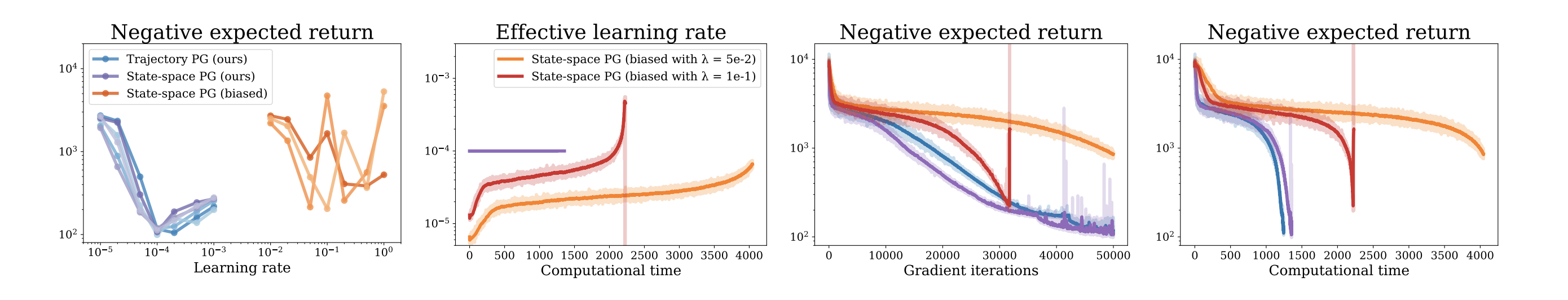

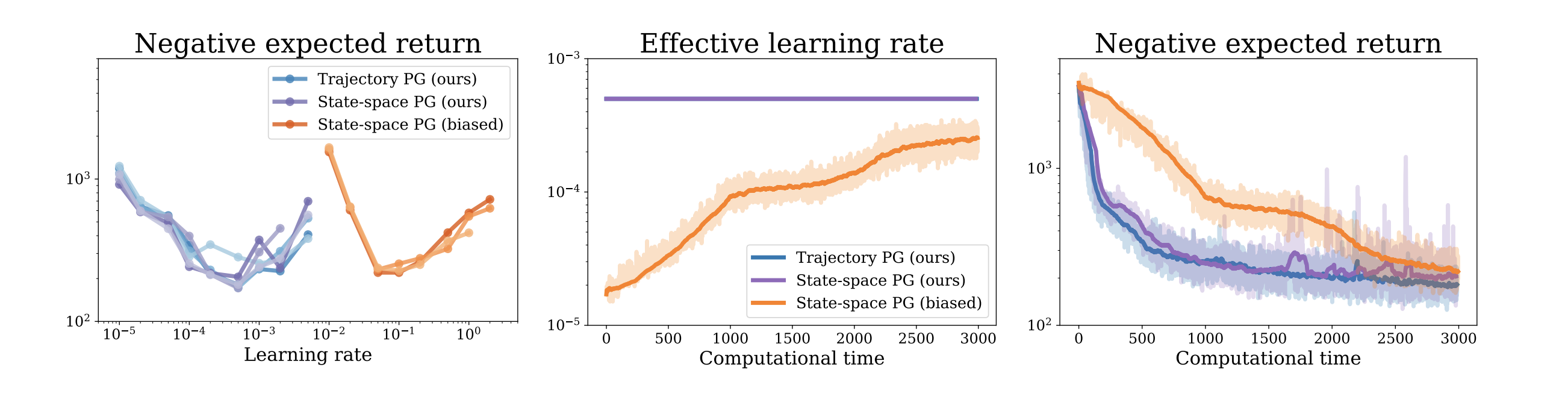

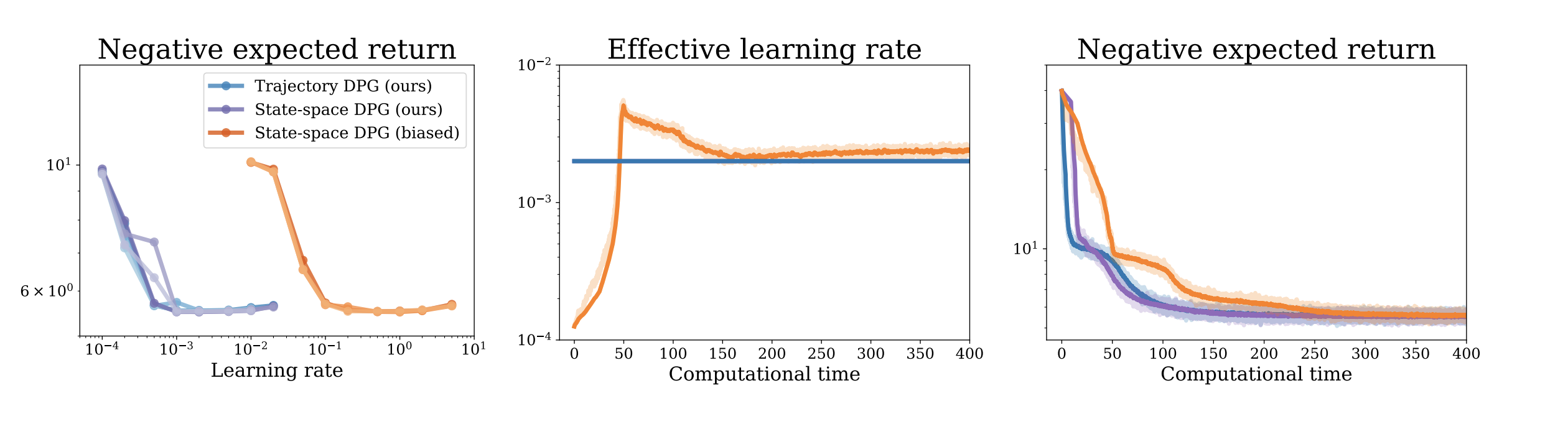

We extend the standard reinforcement learning framework to random time horizons. While the classical setting typically assumes finite and deterministic or infinite runtimes of trajectories, we argue that multiple real-world applications naturally exhibit random (potentially trajectory-dependent) stopping times. Since those stopping times typically depend on the policy, their randomness has an effect on policy gradient formulas, which we (mostly for the first time) derive rigorously in this work both for stochastic and deterministic policies. We present two complementary perspectives, trajectory or state-space based, and establish connections to optimal control theory. Our numerical experiments demonstrate that using the proposed formulas can significantly improve optimization convergence compared to traditional approaches.
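Because the stopping time depends on the policy, a trajectory-based (score-function) gradient estimate has to accumulate the policy's log-likelihood terms only up to the random stopping time τ. The following is a minimal sketch of that idea, assuming a hypothetical toy problem: a walk on {0, ..., N} that stops when it first hits 0 or N. It uses the generic likelihood-ratio (REINFORCE) estimator, not the specific formulas derived in the paper. The environment, reward values and all names (`run_episode`, `reinforce_gradient`, `exact_value`) are assumptions made for illustration. An exact policy evaluation of the same chain is included so that a finite-difference gradient can serve as a reference.

```python
import math
import random

# Hypothetical toy problem (illustration only, not taken from the paper):
# a walk on {0, ..., N} that stops at the random time tau when it first hits
# 0 or N, so tau depends on the policy parameter theta.
N = 6
START = 3
STEP_REWARD = -1.0                      # reward per step taken before stopping
TERMINAL_REWARD = {0: 0.0, N: 10.0}     # reward collected at the stopping state


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def run_episode(theta: float, rng: random.Random, max_steps: int = 10_000):
    """Sample one stopped trajectory; return (total reward, summed score)."""
    p_right = sigmoid(theta)            # stochastic policy: P(move right)
    s, ret, score, steps = START, 0.0, 0.0, 0
    # Loop until the stopping time; max_steps only guards against runaway episodes.
    while s not in TERMINAL_REWARD and steps < max_steps:
        right = rng.random() < p_right
        # d/dtheta log pi(a | s) for a Bernoulli(sigmoid(theta)) policy
        score += (1.0 if right else 0.0) - p_right
        ret += STEP_REWARD
        s += 1 if right else -1
        steps += 1
    ret += TERMINAL_REWARD.get(s, 0.0)  # no terminal reward if truncated
    return ret, score


def reinforce_gradient(theta: float, n_episodes: int, seed: int = 0) -> float:
    """Monte Carlo score-function estimate of dJ/dtheta over stopped episodes."""
    rng = random.Random(seed)
    total = 0.0
    for _ in range(n_episodes):
        ret, score = run_episode(theta, rng)
        total += ret * score            # return times score summed up to tau
    return total / n_episodes


def exact_value(theta: float, sweeps: int = 2_000) -> float:
    """J(theta) = V(START), via Gauss-Seidel policy evaluation of the chain."""
    p = sigmoid(theta)
    v = [0.0] * (N + 1)
    v[0], v[N] = TERMINAL_REWARD[0], TERMINAL_REWARD[N]
    for _ in range(sweeps):
        for s in range(1, N):
            v[s] = STEP_REWARD + p * v[s + 1] + (1.0 - p) * v[s - 1]
    return v[START]


if __name__ == "__main__":
    theta, h = 0.0, 1e-4
    fd = (exact_value(theta + h) - exact_value(theta - h)) / (2 * h)
    est = reinforce_gradient(theta, n_episodes=50_000)
    print(f"finite difference: {fd:.3f}   REINFORCE estimate: {est:.3f}")
```

At `theta = 0` the finite-difference reference is 7.5 (here J = -E[τ] + 10·P(hit N)), and the Monte Carlo estimate should scatter around it. Plain Monte Carlo without a baseline keeps the sketch short; subtracting a constant baseline from the return would reduce variance without introducing bias.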

Link:

Read the paper

Additional Information

Brief introduction of the dida co-author(s) and relevance for dida's ML developments.

Dr. Lorenz Richter

Coming from stochastics and numerical analysis (FU Berlin), the mathematician has been working on deep learning algorithms for several years. Alongside his passion for theory, he has solved a range of practical data science problems over the past 10 years. Lorenz leads the machine learning team.